
Foundation Models: The Backbone of Next-Generation Artificial Intelligence

Introduction

A medical student who has spent years learning basic sciences like anatomy, physiology, and biochemistry doesn't need to relearn all of these subjects from scratch to specialize in heart surgery; they only need to build specialized knowledge of cardiac surgery on top of that strong existing foundation. Foundation Models bring the same principle to artificial intelligence.

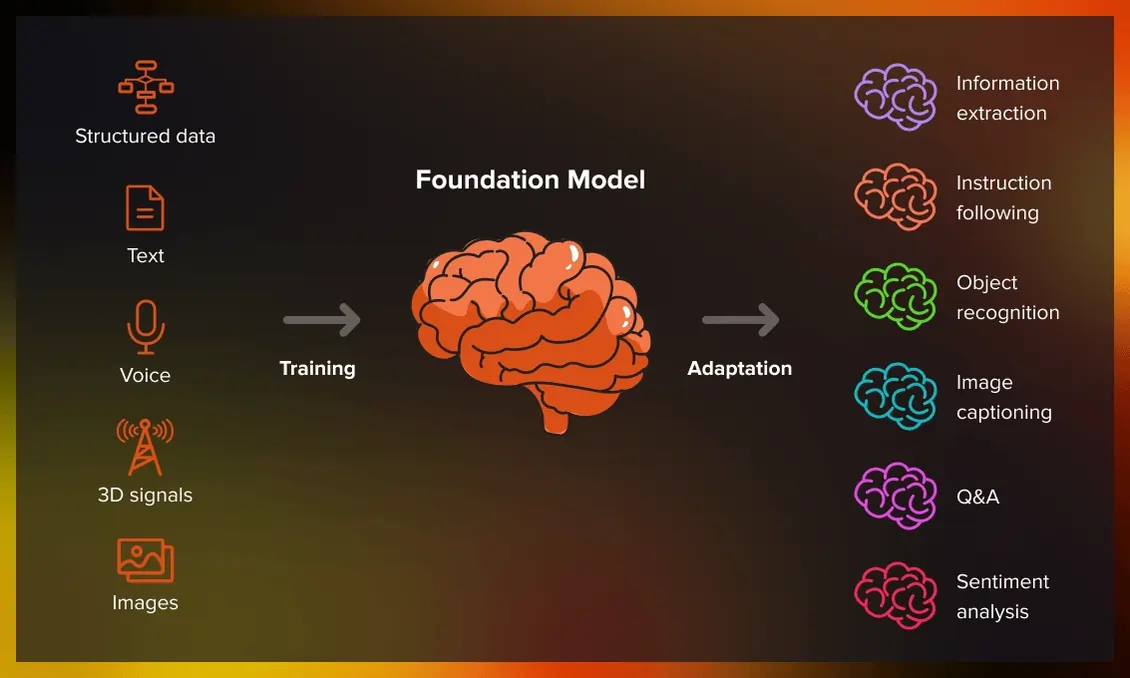

Foundation Models have sparked a revolution in AI, transforming how we develop, deploy, and use intelligent systems. Trained on massive amounts of diverse data, these models acquire broad general knowledge that can be adapted to hundreds of different applications. Many of today's AI systems are built on this technology, from ChatGPT, which millions of people use every day, to medical diagnostic tools.

In this comprehensive article, we'll deeply explore Foundation Models, their architecture, how they work, amazing applications, and the challenges facing this technology. Join us to discover the fascinating world of this transformative innovation.

What are Foundation Models?

Foundation Models refer to large, powerful machine learning models trained on enormous amounts of diverse, unlabeled data that can adapt to a wide range of different tasks. These models serve as a "foundation" or "basis" for building more specialized AI systems.

The key difference between Foundation Models and traditional machine learning models is that older models were typically trained for one specific task with labeled data. For instance, a model would be designed solely to distinguish cats from dogs and couldn't perform other tasks. But Foundation Models are like multidisciplinary scientists who can operate across various fields.

Key Features of Foundation Models

1. Massive Scale: These models typically have billions of parameters. For example, GPT-3 has 175 billion parameters, which made it one of the largest neural networks at the time of its release in 2020.

2. Self-Supervised Learning: These models train without requiring manual data labeling. For instance, a language model learns language by predicting the next word in a sentence.

3. Knowledge Transfer Capability: The ability to use knowledge learned in one domain to solve problems in other domains—the concept of Transfer Learning.

4. Multi-Task Nature: A Foundation Model can be used for diverse tasks like translation, summarization, code generation, and sentiment analysis without changing its core architecture.

5. Emergence: As models grow larger, they can show new capabilities that were absent or much weaker in smaller models. These capabilities sometimes appear abruptly.
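Feature 2 above, self-supervised learning, can be made concrete with a short Python sketch. It turns raw text into training examples without any human labeling, because each target is simply the word that follows its context. Splitting on whitespace and the fixed `context_size` are simplifications assumed for illustration. Real models use subword tokenizers and usually condition on the entire preceding sequence.

```python
def next_word_pairs(text: str, context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Turn raw text into (context, next word) training pairs.

    The "label" of each pair is just the word that follows the context,
    so no manual annotation is required.
    """
    words = text.split()  # simplification: real models use subword tokens
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs


for context, target in next_word_pairs("the cat sat on the mat"):
    print(context, "->", target)
# ('the', 'cat', 'sat') -> on
# ('cat', 'sat', 'on') -> the
# ('sat', 'on', 'the') -> mat
```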

History and Evolution of Foundation Models

The journey toward Foundation Models began in the 2010s. In 2013, Word2Vec was introduced, a major step in learning semantic word representations. Then in 2017, the historic paper "Attention Is All You Need" was published. It introduced the Transformer architecture, which forms the basis of most modern Foundation Models.

In 2018, Google introduced BERT. It showed that a single pre-trained model could be fine-tuned to achieve state-of-the-art results on eleven natural language processing tasks. OpenAI followed with GPT-2 (2019) and GPT-3 (2020), which showcased remarkable text generation capabilities.

Today we're witnessing a new generation of Foundation Models that are multimodal, meaning they can work with text, images, audio, and even video. Models like GPT-4, Claude Sonnet 4.5, and Gemini 2.5 Flash exemplify this generation. Strong open-weight language models such as DeepSeek V3 are part of the same wave.

Architecture and How Foundation Models Work

Foundation Models are typically built on the Transformer architecture. Its key component is the attention mechanism. When processing each token, attention lets the model weigh how relevant every other part of the input is, so it can focus on the parts that matter most.
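The attention mechanism can be sketched in plain Python as scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, the formula introduced in the Transformer paper. This toy version assumes tiny hand-written vectors. It also skips the learned query/key/value projections and the multiple attention heads that real models use.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys, values: lists of equal-length vectors, one per token.
    Returns one output vector per query.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much to "focus" on each token
        # Output = attention-weighted sum of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy tokens with 2-dimensional embeddings. Q = K = V here (self-attention
# without the learned projection matrices a real Transformer applies).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(x, x, x))
```

Each output is a weighted average of the value vectors. Tokens whose keys resemble the query receive larger weights.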

The Pre-training Process

Pre-training is the phase in which the model learns from massive amounts of general-purpose data. During this phase:

For language models: The model reads text from the internet, books, scientific papers, and other sources, amounting to billions or even trillions of words. It repeatedly tries to predict the next word (more precisely, the next token). This seemingly simple task pushes the model to develop a broad grasp of language, grammar, real-world knowledge, and even some reasoning ability.
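Written as a formula, the next-word objective is the standard autoregressive language-modeling loss. In this sketch, x_1 to x_T are the tokens of one training sequence and θ stands for the model's parameters. This notation is assumed here and is not taken from a specific model's documentation.

$$
\mathcal{L}(\theta) = -\sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
$$

Minimizing this loss means assigning high probability to the token that actually comes next at every position. Dividing by T gives the average per-token cross-entropy that is usually reported during training.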

For vision models: The model views millions of images and learns to recognize objects, patterns, textures, and spatial relationships. Architectures like Vision Transformers (ViT) are used in this area.
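A Vision Transformer does not read pixels one at a time. It first cuts the image into fixed-size patches and treats each flattened patch as a "token". The helper below is a minimal pure-Python sketch of that first step. It assumes a single-channel image stored as a list of rows. A real ViT then applies a learned linear projection and position embeddings to each patch, and it commonly uses 16x16 patches rather than the 2x2 used here.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (list of rows) into non-overlapping square patches.

    Each patch is flattened row by row into one vector, the way a Vision
    Transformer turns an image into a sequence of tokens.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[r][c]
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A 4x4 single-channel "image" with pixel values 0..15.
img = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(img, patch_size=2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```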

For multimodal models: The model trains on text and image data at the same time, learning how the two domains relate, for example which caption describes which picture. We explore this in detail in our article on multimodal models.
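Contrastive training, popularized by CLIP, is one widely used way to learn how text and images relate. Matching image/caption pairs are pushed toward high similarity, and mismatched pairs toward low similarity. The sketch below computes a CLIP-style symmetric loss for a toy batch. The 2-dimensional embeddings and the fixed temperature of 0.07 are illustrative assumptions. In practice the embeddings come from trained image and text encoders, and CLIP learns its temperature.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss in the style of CLIP.

    image_embs[i] and text_embs[i] describe the same item (a matching pair);
    every other image/text combination in the batch counts as a non-match.
    """
    n = len(image_embs)
    logits = [[cosine(img, txt) / temperature for txt in text_embs] for img in image_embs]

    def cross_entropy(row, target):
        # -log softmax(row)[target], computed stably.
        m = max(row)
        log_sum = m + math.log(sum(math.exp(v - m) for v in row))
        return log_sum - row[target]

    # Image -> text: each image should pick out its own caption (rows).
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: each caption should pick out its own image (columns).
    loss_t2i = sum(cross_entropy([logits[r][i] for r in range(n)], i) for i in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(contrastive_loss(images, aligned_texts) < contrastive_loss(images, swapped_texts))  # True
```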

The Fine-tuning Process

After pre-training, the model is fine-tuned for specific tasks. In this phase, it trains on a smaller but more specialized dataset. For example:

- A language Foundation Model can be fine-tuned for medical diagnosis and treatment

- The same model can be adjusted for financial analysis

- Or optimized for programming code generation

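Modern parameter-efficient fine-tuning techniques have made this process much more efficient. These include LoRA (Low-Rank Adaptation) and QLoRA, which applies LoRA on top of a quantized base model. Because they train only a small number of additional parameters, they greatly reduce compute and memory requirements.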
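The idea behind LoRA fits in a few lines. The pre-trained weight matrix W stays frozen. The model instead learns a low-rank update B·A, which is added to the layer's output and scaled by alpha / r. This minimal pure-Python sketch uses arbitrary toy sizes and values. Real implementations, such as Hugging Face's PEFT library, inject the same kind of update into selected layers of a Transformer.

```python
import random


def matvec(m, v):
    """Multiply a matrix m (list of rows) by a vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha):
    """LoRA forward pass: h = W x + (alpha / r) * B (A x).

    W (d_out x d_in) is the frozen pre-trained weight; only the small
    matrices A (r x d_in) and B (d_out x r) would be trained.
    """
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def trainable_params(d_in, d_out, r):
    """Parameter counts: full fine-tuning of W vs. training only A and B."""
    return d_in * d_out, r * (d_in + d_out)


d_in, d_out, r = 8, 8, 2
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]  # random init
B = [[0.0] * r for _ in range(d_out)]  # zero init: the adapter starts as a no-op
x = [1.0] * d_in

print(lora_forward(W, A, B, x, alpha=16) == matvec(W, x))  # True
print(trainable_params(4096, 4096, 8))  # (16777216, 65536)
```

The last line shows why LoRA is cheap. For a single 4096 x 4096 layer with rank 8, the adapter trains 65,536 parameters instead of about 16.8 million, roughly 0.4% of the original.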

Prompt Engineering: Usage Without Fine-tuning

One of the most attractive features of Foundation Models is that they can perform complex tasks without any additional training. You only need to carefully design the questions or instructions you give them (prompts). Prompt engineering has become a critical skill for getting the best output from Foundation Models.
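As a small illustration of prompt engineering, the sketch below assembles a few-shot prompt. It contains a task instruction, a couple of worked examples, and the new input, and it ends where the model should continue. The function name, field labels, and example reviews are hypothetical. The resulting string would be sent to whichever model or API you use; no model is called here.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    The model is not retrained; the examples inside the prompt demonstrate the task.
    """
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")  # the model is expected to continue from here
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    [("The battery lasts all day.", "Positive"), ("It broke after a week.", "Negative")],
    "Setup took two minutes and it works perfectly.",
)
print(prompt)
```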

Types of Foundation Models

| Model Type | Main Application | Famous Examples |
|---|---|---|
| Language Models (LLM) | Text processing & generation, conversation, translation | GPT-4, Claude, Gemini, DeepSeek |
| Vision Models | Image recognition, classification, segmentation | CLIP, DINOv2, SAM |
| Image Generation Models | Generating images from text or images | DALL-E, Midjourney, Stable Diffusion, Flux |
| Video Generation Models | Generating realistic videos | Sora, Veo, Kling |
| Audio Models | Speech recognition and generation | Whisper, AudioLM |
| Multimodal Models | Working with text, images, audio, and video | GPT-4V, Gemini Pro, Claude 3 |

Large Language Models (LLM)

Language models are the most popular type of Foundation Model. These models have been trained on billions or even trillions of words and can:

- Generate text: From writing poetry and stories to producing specialized articles

- Answer questions: Responding to questions on a wide range of topics, like a conversational encyclopedia

- Translate: Fluent translation across many languages, with quality that varies by language

- Write code: From simple code to complex programs

- Summarize: Condensing lengthy texts into key bullet points

Vision Models

These models have been trained on millions of images and can:

- Recognize objects: From facial recognition to identifying diseases in medical images

- Classify images: Product categorization, quality detection, etc.

- Perform segmentation: Precise separation of objects in images

For real-world applications of these models, see our articles on AI image processing and machine vision.

Generative Models

Using techniques such as diffusion models and GANs (generative adversarial networks), these models can:

- Create artistic images: Midjourney, Flux, and GPT Image-1

- Generate realistic videos: Sora 2, Kling, and Veo 3

- Create creative content: For advertising, music, and art
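Diffusion models learn to generate images by reversing a gradual noising process. The forward (noising) half is simple enough to sketch. A clean sample x0 is blended with Gaussian noise as x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise, where alpha_bar_t comes from a noise schedule. The sketch below assumes the linear schedule from the DDPM paper (beta from 1e-4 to 0.02 over 1,000 steps). It uses a short list of numbers in place of an image. The trained network, which is not shown, learns to predict the added noise so the process can be run backward. GANs work differently and are not covered by this example.

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t of (1 - beta_t) for a linear noise schedule."""
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise  # the denoiser is trained to predict `noise` from (xt, t)


alpha_bars = linear_alpha_bars(1000)
rng = random.Random(42)
clean = [0.5, -0.2, 0.8, 0.1]  # tiny stand-in for image pixels
slightly_noisy, _ = add_noise(clean, 10, alpha_bars, rng)
almost_pure_noise, _ = add_noise(clean, 999, alpha_bars, rng)
print(slightly_noisy)
print(almost_pure_noise)
```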

Amazing Applications of Foundation Models

1. Medicine and Healthcare

Imagine a doctor available 24/7 who has read millions of medical papers and can analyze MRI and CT scans with high accuracy. Foundation Models are bringing this vision closer to reality:

- Early cancer detection: Vision models can help spot tumors at early stages, including subtle signs that are easy for the human eye to miss

- New drug discovery: Models can screen millions of chemical compounds in simulation and help identify promising drug candidates (see AI drug discovery)

- Diagnosis from symptoms: A language model can analyze patient symptoms and suggest probable diagnoses

- Genetic research: Helping researchers understand genetic diseases (see human genetics and AI)

2. Education and Learning

Imagine a personal teacher who designs a unique learning program for each student:

- Personalized learning: The model understands where you're weak and provides appropriate exercises

- Instant translation: Students can read scientific resources in any language

- Educational content generation: Automatic generation of tests, questions, and detailed answers

- Teacher assistance: Automatic assignment evaluation and constructive feedback

For a broader look at this technology's impact, see AI and the future of education.

3. Business and Management

- Customer analysis: Deep understanding of customer needs and behavior to improve user experience

- Customer service automation: Intelligent chatbots that can handle a large share of routine questions

- Market forecasting: Financial prediction models analyzing future trends

- Supply chain optimization: Demand forecasting and inventory management

- Smart recruitment: Using AI in recruitment to find the best candidates

4. Creativity and Art

- Graphic design: Generating logos, posters, and advertising images in seconds

- Music generation: Creating original songs in various styles

- Writing: Helping writers with content creation

- Fashion design: Using AI in the fashion industry for trend prediction and clothing design

5. Security and Defense

- Cyber threat detection: Identifying attacks and suspicious activity (see cybersecurity with AI)

- Security data analysis: Processing millions of security events instantly

- Facial recognition: Verifying identities for access control and security

- Crisis prediction: Supporting early warning and crisis management

6. Transportation and Automotive

- Self-driving cars: Interpreting camera and sensor data to support autonomous driving

- Route optimization: Finding the best route considering traffic and weather conditions

- Predictive maintenance: Detecting damaged parts before failure

Comparing Foundation Models with Other Approaches

| Feature | Traditional Models | Foundation Models |
|---|---|---|
| Training Data Volume | Thousands to millions of samples | Billions of samples |
| Number of Parameters | Thousands to millions | Billions |
| Training Cost | Low to medium | Very high (millions of dollars) |
| Task Specialization | One specific task | Multiple diverse tasks |
| Labeled Data Requirement | Yes, large volumes | Not for pre-training (self-supervised) |
| Knowledge Transfer Capability | Limited | Excellent |
| Adapting to New Tasks | Needs retraining | Quick, via prompting or light fine-tuning |
| Accessibility | Requires in-house development | Available through APIs and ready-made tools |

Foundation Model Optimization Techniques

1. Knowledge Distillation

Knowledge Distillation is a technique where a large model (teacher) transfers its knowledge to a smaller model (student). This results in:

- Faster model execution

- Reduced memory requirements

- Lower deployment costs
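A common way to implement knowledge distillation, following Hinton et al. (2015), is to train the student to match the teacher's temperature-softened output distribution. This pure-Python sketch computes that soft-target loss for a single example. The logits and the temperature of 2.0 are illustrative assumptions. In practice this term is usually combined with the ordinary loss on the true labels.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a temperature (higher = softer distribution)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2.

    Softening exposes how the teacher ranks the wrong answers, not only which
    answer it picks; the T^2 factor keeps gradient sizes comparable across T.
    """
    p_teacher = softmax_with_temperature(teacher_logits, temperature)
    p_student = softmax_with_temperature(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.5, 0.2]
print(distillation_loss([4.0, 1.5, 0.2], teacher))      # 0.0: student matches teacher
print(distillation_loss([0.2, 1.5, 4.0], teacher) > 0)  # True
```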

2. Quantization and Pruning

These techniques reduce model size without significantly decreasing accuracy:

- Quantization: Reducing number precision from 32-bit to 8-bit or even 4-bit

- Pruning: Removing low-importance weights from the network

These techniques are covered in detail in our article on AI optimization.
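Quantization and pruning each fit in a few lines. The sketch below assumes the simplest variant of each. It uses symmetric per-tensor 8-bit quantization, with one scale factor for the whole list of weights, and unstructured magnitude pruning, which zeroes the smallest weights. Production toolkits use finer-grained scales and calibration data, and they often fine-tune after pruning. The weight values here are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    Maps floats to integers in [-127, 127] with one shared scale factor,
    so each weight can be stored in 8 bits instead of 32.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


w = [0.42, -1.27, 0.03, 0.88, -0.05, 0.61]
q, scale = quantize_int8(w)
print(q)                        # small integers, 8 bits each
print(dequantize(q, scale))     # close to the original weights
print(magnitude_prune(w, 0.5))  # [0.0, -1.27, 0.0, 0.88, 0.0, 0.61]
```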

3. Mixture of Experts (MoE)

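Mixture of Experts is an architecture in which a small router network sends each input token to only a few specialized sub-networks (experts). Because only part of the model is active for each input, the computational cost per token stays low even when the total parameter count is very large.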
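The routing step of a Mixture of Experts layer can be sketched as top-k gating: score every expert, keep the best k, and mix only their outputs. In this toy Python version the experts are simple functions and the gate scores are fixed numbers, both assumed for illustration. In a real MoE Transformer the experts are feed-forward networks, and a learned router computes the scores for each token.

```python
import math


def top_k_route(gate_scores, k):
    """Keep the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)[:k]
    m = max(gate_scores[i] for i in top)
    exps = {i: math.exp(gate_scores[i] - m) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def moe_layer(x, experts, gate_scores, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = top_k_route(gate_scores, k)
    output = [0.0] * len(x)
    for i, w in weights.items():
        y = experts[i](x)  # only k expert computations happen
        output = [o + w * yi for o, yi in zip(output, y)]
    return output, sorted(weights)


# Four toy "experts" (in a real model, each is a feed-forward network).
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [-a for a in v],
    lambda v: [a * a for a in v],
]
# Hypothetical router scores for one token.
out, used = moe_layer([1.0, 2.0], experts, gate_scores=[0.1, 2.0, -1.0, 1.5], k=2)
print(used)  # [1, 3]
print(out)
```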

4. Flash Attention

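Flash Attention is an optimized, exact implementation of the attention mechanism. It processes attention in small blocks that fit in fast on-chip GPU memory, instead of writing the full attention matrix to slower memory. This reduces memory use and can make attention several times faster.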
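Flash Attention avoids storing the full attention matrix by using an "online" softmax. Scores are processed one block at a time, and a running maximum and running sum are rescaled as new blocks arrive. The pure-Python sketch below is written for a single query with toy vectors and shows only this math. The real FlashAttention is a fused GPU kernel, and this Python version is not faster. Its output matches ordinary attention up to floating-point rounding.

```python
import math


def naive_attention_row(q, keys, values):
    """Attention output for one query, computing all scores at once."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(len(values[0]))]


def tiled_attention_row(q, keys, values, block_size=2):
    """Same result, computed block by block with an online softmax.

    m: running max score, l: running normalizer, acc: running unnormalized
    output. Earlier partial sums are rescaled whenever a larger score appears.
    """
    d = len(q)
    m, l = float("-inf"), 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in k_blk]
        m_new = max(m, max(scores))
        correction = math.exp(m - m_new)  # 0.0 on the first block (m = -inf)
        l = l * correction + sum(math.exp(s - m_new) for s in scores)
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_blk):
            p = math.exp(s - m_new)
            acc = [a + p * vj for a, vj in zip(acc, v)]
        m = m_new
    return [a / l for a in acc]


q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.3, 0.3]]
values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
print(naive_attention_row(q, keys, values))
print(tiled_attention_row(q, keys, values, block_size=2))  # same up to rounding
```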

5. Sparse Attention

Sparse Attention does not compute attention between every pair of tokens. Instead, each token attends only to a selected subset, for example its nearby tokens. This reduces computation, especially for long inputs.
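Sliding-window attention is one common sparse pattern, in which each token attends only to itself and a fixed number of preceding tokens. The sketch below builds such a mask and counts how many token pairs get scored. The sequence length and window size are arbitrary illustrative values. In a model, masked-out pairs would have their scores set to negative infinity before the softmax.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j.

    Causal sliding window: token i sees itself and the previous
    window - 1 tokens, instead of every earlier token.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def count_pairs(mask):
    """Number of (query, key) pairs whose attention score is actually computed."""
    return sum(sum(row) for row in mask)


n, w = 8, 3
sparse = sliding_window_mask(n, w)
full_causal = sliding_window_mask(n, n)  # window = n gives ordinary causal attention
print(count_pairs(full_causal), count_pairs(sparse))  # 36 21
```

For a fixed window w, the number of scored pairs grows roughly as n·w instead of n², which is what makes long inputs cheaper.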

Using Foundation Models: Fine-tuning vs RAG vs Prompt Engineering

When you want to use a Foundation Model for a specific application, you have three main approaches:

1. Fine-tuning

Additional training of the model on your specialized data. Suitable when:

- You have lots of data (thousands of samples)

- You need very high performance

- You want the model to learn a specific style and behavior

2. RAG (Retrieval-Augmented Generation)

In RAG, the system first retrieves relevant passages from an external knowledge base. It then passes them to the model along with the question, so the answer is grounded in those sources. Suitable when:

- Data is regularly updated

- You need document-based responses

- You want to track response sources
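The retrieve-then-generate flow of RAG can be sketched end to end without any external services. For simplicity, this example uses bag-of-words cosine similarity as the retriever and a made-up instruction wording; both are assumptions. Real systems typically use dense embeddings stored in a vector database. The resulting prompt would then be sent to a language model, which is not called here.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens (a crude stand-in for a real embedding model)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine_similarity(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=1):
    """Place numbered retrieved passages before the question so answers can cite them."""
    context = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(retrieve(query, documents, k)))
    return f"Answer using only the sources below and cite them.\n\n{context}\n\nQuestion: {query}"


docs = [
    "The refund window for online orders is 30 days from delivery.",
    "Our support line is open Monday through Friday.",
    "Gift cards cannot be exchanged for cash.",
]
print(build_rag_prompt("How many days do I have to request a refund?", docs))
```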

3. Prompt Engineering

Precise design of instructions for the model. Suitable when:

- You need results quickly

- You don't have much data for fine-tuning

- You want to work on multiple different tasks

For a detailed comparison of all three methods, see Fine-tuning vs RAG vs Prompt Engineering.

Challenges and Limitations of Foundation Models

1. High Computational Cost

Training a Foundation Model can cost millions of dollars. For example, one widely cited estimate put the compute cost of a single GPT-3 training run at about $4.6 million. Running these models also requires powerful hardware.

Solution: Using Small Language Models (SLM) for specific applications, or using AI-specific chips.
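Training cost is driven mainly by the amount of compute. A common back-of-the-envelope rule from OpenAI's scaling-law work estimates training compute for a dense Transformer at about 6 × parameters × training tokens FLOPs. The sketch below applies this rule to GPT-3's published size: 175 billion parameters and about 300 billion training tokens. The hardware throughput and 40% utilization in the second calculation are hypothetical values, so the resulting GPU-days figure is only illustrative.

```python
def training_flops(num_params, num_tokens):
    """Approximate training compute for a dense Transformer: ~6 FLOPs per parameter
    per token (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * num_params * num_tokens


def gpu_days(total_flops, flops_per_second, utilization):
    """Convert total FLOPs into single-GPU days at a given sustained utilization."""
    seconds = total_flops / (flops_per_second * utilization)
    return seconds / 86_400


# GPT-3: 175B parameters, roughly 300B training tokens.
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e}")  # 3.15e+23

# Hypothetical accelerator: 100 TFLOP/s peak at 40% utilization.
print(round(gpu_days(flops, 100e12, 0.4)))  # 91146
```

The estimate is close to the roughly 3.14 × 10^23 FLOPs reported in the GPT-3 paper. Converting compute into dollars then depends on hardware prices and efficiency, which is why published cost estimates vary.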

2. Hallucination

Sometimes models generate information that is incorrect but sounds convincing. This problem, known as AI hallucination, is one of the biggest challenges.

Solution: Using RAG to ground answers in credible sources, or using reasoning models such as o3-mini, which work through intermediate reasoning steps before responding.

3. Bias and Discrimination

Models may reinforce biases present in their training data. This is discussed further in our article on ethics in AI.

4. Lack of Transparency

These models often act like a "black box": we don't know exactly how they reach a conclusion. The field of explainable AI (XAI) aims to address this problem.

5. Context Length Limitations

Every model can process only a limited amount of text at once, known as its context window, so very long documents may not fit. Newer models with larger context windows, such as Claude Sonnet 4.5, have significantly reduced this limitation.
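When a document is longer than the context window, a common workaround is to split it into overlapping chunks. The chunks can then be processed one at a time, or only the relevant ones can be retrieved, as in RAG. This minimal sketch uses words as a stand-in for tokens, and the chunk size and overlap are illustrative.

```python
def chunk_words(text, max_words, overlap):
    """Split text into overlapping chunks of at most max_words words.

    Words approximate tokens here; real systems count tokens with the
    model's own tokenizer. The overlap keeps content that straddles a
    boundary visible in both neighbouring chunks.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


text = " ".join(f"w{i}" for i in range(10))
print(chunk_words(text, max_words=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```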

6. Security and Privacy

- Prompt Injection: Injecting malicious instructions into model input

- Information leakage: Potential disclosure of sensitive information from training data

- Misuse: Using models for malicious purposes

Solution: For privacy, techniques such as federated learning can help, because they train models without collecting users' raw data in one place.
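The core of federated learning in the FedAvg algorithm (McMahan et al., 2017) is a weighted average. Each client trains on its own data, and only the resulting model weights are combined on a server. The sketch below shows just that aggregation step, with made-up weight vectors and dataset sizes. On its own, federated learning does not guarantee privacy, because model updates can still leak information. It is often paired with techniques such as secure aggregation or differential privacy.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg aggregation: average client weights, weighted by local dataset size.

    Only these weight vectors reach the server; raw data stays on each client.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


# Three hypothetical clients (e.g. hospitals) after one round of local training.
weights = [[0.2, 1.0], [0.4, 0.0], [0.9, 0.5]]
sizes = [100, 300, 100]
print(federated_average(weights, sizes))  # approximately [0.46, 0.3]
```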

7. Language Limitations

Foundation Models typically perform better in widely used languages like English and are weaker in lower-resource languages like Persian. Our article on language model limitations explores this topic.

The Future of Foundation Models

1. Self-Improving Models

Self-improving models and Self-Rewarding Models are an emerging research direction. In this approach, models generate and evaluate their own training signals, aiming to improve with far less new human-labeled data.

2. AGI (Artificial General Intelligence)

Many researchers see Foundation Models as an important step toward AGI: intelligence that performs at or beyond human level across virtually all domains. If achieved, AGI could profoundly transform the world.

3. World Models

World Models learn an internal representation of how the real world works. They can use it to simulate and predict what happens next.

4. Multi-Agent Models

In multi-agent systems, multiple agents built on Foundation Models collaborate on a task. Frameworks like LangChain, CrewAI, and AutoGen enable this.

5. Physical AI

Physical AI combines Foundation Models with robotics for physical world interaction.

6. Agentic AI

Agentic AI and AI Agents are models that can independently plan, decide, and act.

7. Quantum Computing and AI

Some researchers believe quantum computing could eventually speed up parts of model training and inference, although practical advantages remain speculative.

8. Continual Learning

Continual Learning allows models to learn new things without forgetting previous knowledge.

Tools and Frameworks for Working with Foundation Models

Various tools are available for working with Foundation Models:

Deep Learning Frameworks

- TensorFlow: Google's powerful framework

- PyTorch: Most popular framework in research

- Keras: Simple API for beginners

Cloud Platforms

- Google Cloud AI: Google's AI tools

- Azure AI: Microsoft services

- AWS SageMaker: Amazon platform

No-Code Tools

- Google Opal: Building apps without code

- Google Antigravity: Agentic development environment

Foundation Models and Industry Transformation

Business Transformation

Foundation Models are fundamentally changing how business is conducted:

- Digital marketing: Content generation, customer analysis, ad optimization

- Customer service: 24/7 high-quality responses

- Data analysis: Extracting insights from massive data

- Team management: Improving productivity and collaboration

Technology Transformation

- Smart browsers: Web automation with AI

- App building: Faster development with AI assistance

- Web 4.0: The intelligent web of the future

Society Transformation

- Smart cities: Urban optimization with AI

- Smart home: Easier and safer living

- Smart agriculture: Increasing food production

- Environment: Protecting nature

Conclusion

Foundation Models are arguably among the most important technological breakthroughs of recent decades. They have not only revolutionized how we work with artificial intelligence but are also fundamentally changing industries, professions, and even how humans interact with technology.

From personalized medicine to self-driving cars, and from tailored education to digital art, Foundation Models are redefining the boundaries of what's possible.

However, with these amazing advances come challenges like trustworthiness, ethics, employment impact, and privacy that we must carefully address.

Many expect AI to move toward AGI and possibly even ASI (Artificial Superintelligence). Foundation Models are the basis of this exciting journey, and we're only at the beginning.

For those wanting to work in this field, countless opportunities exist—from startup ideas to earning income from AI. The future belongs to those who understand this technology and can use it for humanity's benefit.
