
Transformer Model: Revolution in Deep Learning and Artificial Intelligence

Introduction

The Transformer model is one of the most significant breakthroughs in deep learning. It was introduced by Google researchers in 2017 in the paper "Attention Is All You Need." Built around the Attention Mechanism, this architecture overcame fundamental limitations of earlier models and revolutionized natural language processing, computer vision, and many other fields.

The main difference between Transformers and traditional models such as RNNs and LSTMs lies in how they process sequential data. Older models had to process a sequence one element at a time. Transformers can process all positions of a sequence in parallel. This speeds up training dramatically and also makes it easier to learn relationships between elements that are far apart.

Today, virtually all the advanced language models we interact with, from ChatGPT to Claude, Gemini, and Llama, are built on the Transformer architecture. These models perform strongly on tasks ranging from machine translation and text generation to sentiment analysis and question answering.

History and Motivation for Creating Transformers

Before Transformers, Recurrent Neural Networks (RNNs) and their more advanced variant, Long Short-Term Memory (LSTM) networks, were the standard for processing sequential data. Despite their successes, these models had serious problems:

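Vanishing and Exploding Gradient Problem: During training, gradients are propagated backward through every time step. Over long sequences, they tend to shrink toward zero or grow uncontrollably. As a result, information from the first words of a long sentence effectively faded away, and the model struggled to learn long-term dependencies.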

No Parallel Processing: Due to the sequential nature of these models, the full power of Graphics Processing Units (GPUs) couldn't be utilized. Each word had to wait for the previous word to be processed.

Slow Training: Training RNN models on large datasets was time-consuming and expensive.

Long-term Memory Limitations: Even LSTMs, which were designed to address the memory problem, struggled with very long sequences (e.g., several hundred words).

Understanding these limitations, Google researchers sought a solution that could:

- Examine all words in a sentence simultaneously

- Model relationships between distant words effectively

- Leverage parallel processing of GPUs

- Scale to very large models

The result was the Transformer architecture. It met all these goals by dispensing with recurrence entirely and relying on the Attention Mechanism.

Attention Mechanism: The Heart of Transformers

The Attention Mechanism is an idea inspired by how human attention works. When you read a sentence, you automatically pay more attention to important words and consider each word's relationship with other words. The attention mechanism does the same for AI models.

How the Attention Mechanism Works

Suppose we have the sentence "The cat sat on the roof." When the model wants to understand the meaning of "sat," it needs to know who sat and where. The attention mechanism finds these relationships as follows:

For each word, three vectors are computed by multiplying the word's embedding by three learned weight matrices:

- Query: Represents "what is this word looking for?"

- Key: Represents "what information does this word provide?"

- Value: The actual information this word carries

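Next, the model measures how strongly each pair of words is related. It takes the dot product of one word's Query with every word's Key, including its own. In the original Transformer, these dot products are divided by the square root of the Key dimension. They are then passed through a softmax, which turns them into positive weights that sum to 1. These weights are called Attention Scores.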

For example, when examining the word "sat", a trained model might find:

- A strong relationship with "cat" (the subject)

- A medium relationship with "roof" (the location)

- A weak relationship with "on" (a preposition that carries little meaning on its own)

Finally, the new representation of the word "sat" is obtained from the weighted combination of all words' Values, where the weights are the attention scores. This process allows the model to have a deeper understanding of each word's meaning in the sentence context.
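The computation described above is usually written as Attention(Q, K, V) = softmax(Q·Kᵀ / √d_k) · V, where d_k is the dimension of the Key vectors. The sketch below implements that formula in plain Python for a few toy vectors. In a real model, Q, K, and V come from learned projections of the word embeddings, and the work is done with batched matrix operations on a GPU. The vectors here are made-up examples.

```python
import math


def softmax(scores):
    """Numerically stable softmax: turns raw scores into weights that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key and value vectors.

    weights[i][j] is how strongly word i attends to word j, and outputs[i]
    is the attention-weighted sum of all value vectors for word i.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Weighted combination of the value vectors
        out = [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Toy example: one query that resembles the first key more than the second
outputs, weights = scaled_dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print(weights[0])  # the first key receives the larger weight
```

Subtracting the largest score before exponentiating does not change the softmax result. It only prevents overflow when scores are large.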

Multi-Head Attention

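One of the key innovations of Transformers is Multi-Head Attention. Instead of a single attention mechanism, Transformers run several attention mechanisms ("heads") in parallel. Each head works on its own lower-dimensional projection of the Queries, Keys, and Values. The original Transformer used 8 heads, and larger models use dozens. The heads' outputs are concatenated and projected back to the model dimension, and each head can learn to capture a different aspect of the relationships between words.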

For example, in the sentence "The girl who was in the park gave the ball to the boy":

- The first head might focus on grammatical relationships (subject-verb-object)

- The second head on semantic relationships (girl-ball-boy)

- The third head on conjunctions and prepositions

- The fourth head on temporal and positional relationships

This diversity in viewing text allows the model to have a more comprehensive understanding of the sentence and learn more complex patterns.
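A minimal sketch of multi-head attention, assuming the common formulation:

- The input is projected into Q, K, and V.
- The feature dimension is split evenly across the heads, and each head runs scaled dot-product attention on its slice.
- The head outputs are concatenated and passed through an output projection.

The weight matrices here are random placeholders (the `random_matrix` helper is hypothetical). In a trained model they are learned, and that learning is what lets each head specialize.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p); matrices are lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(x * y for x, y in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        largest = max(scores)
        exps = [math.exp(s - largest) for s in scores]
        total = sum(exps)
        w = [e / total for e in exps]
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) for c in range(len(v[0]))])
    return out


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Project x to Q, K, V; split features across heads; attend; concatenate; project."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    head_outputs = []
    for h in range(num_heads):
        cols = slice(h * d_head, (h + 1) * d_head)  # this head's share of the features
        head_outputs.append(attention([r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]))
    # Concatenate the heads' outputs back into one d_model-sized vector per token
    concat = [sum((head[i] for head in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    """Placeholder weights (hypothetical helper); a trained model would learn these."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, num_tokens, num_heads = 8, 4, 2
x = random_matrix(num_tokens, d_model, rng)  # 4 token embeddings of size 8
w_q, w_k, w_v, w_o = (random_matrix(d_model, d_model, rng) for _ in range(4))
y = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(len(y), len(y[0]))  # 4 8
```

Splitting one large projection into slices is equivalent to giving each head its own smaller projection matrices. Most implementations use the single-projection form because it maps onto one large matrix multiplication.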

Self-Attention vs. Cross-Attention

Two main types of attention mechanisms exist in Transformers:

Self-Attention: Each word attends to all words in the same sequence, including itself. This helps the model understand relationships within the text.

Cross-Attention: In tasks like machine translation, target language words attend to source language words. This allows the model to access the entire input sentence when generating each word.

Complete Transformer Architecture

The original Transformer architecture consists of two main parts: an Encoder and a Decoder. Many modern models, however, use only one of the two.

Encoder

The encoder is responsible for understanding and analyzing the input. Each encoder layer consists of two main parts:

1. Self-Attention Layer: This layer allows each word to attend to all words in the sentence and understand their relationships.

2. Feed-Forward Network: After attention, a small neural network is applied to each word's vector independently. It has two linear layers with a ReLU activation in the original paper, or GELU in many later models. This adds non-linear processing on top of the information gathered by attention.

Between these layers, two important techniques are used:

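- Residual Connections: Each sublayer's input is added to its output. This gives gradients a direct path through the network and helps prevent vanishing gradients in deep stacks

- Layer Normalization: Each word's vector is normalized to zero mean and unit variance, followed by a learned scale and shift, to keep training stable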
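A minimal sketch of the feed-forward sublayer with its residual connection and layer normalization. It assumes the post-normalization arrangement of the original paper, LayerNorm(x + FFN(x)), applied to each token independently. For brevity, the learned scale and shift of layer normalization are omitted. The weights are toy values passed as nested lists. The same residual-plus-normalization wrapper also surrounds the attention sublayer. Many recent models normalize before each sublayer instead (pre-LN), which tends to train more stably in deep stacks.

```python
import math


def layer_norm(vec, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]


def feed_forward(vec, w1, b1, w2, b2):
    """Position-wise FFN: Linear(d_model -> d_ff), ReLU, Linear(d_ff -> d_model)."""
    hidden = [max(0.0, sum(v * w1[i][j] for i, v in enumerate(vec)) + b1[j])
              for j in range(len(b1))]
    return [sum(h * w2[i][j] for i, h in enumerate(hidden)) + b2[j]
            for j in range(len(b2))]


def ffn_sublayer(tokens, w1, b1, w2, b2):
    """Apply the FFN to each token independently, add the residual, then normalize."""
    out = []
    for x in tokens:
        y = feed_forward(x, w1, b1, w2, b2)
        out.append(layer_norm([a + b for a, b in zip(x, y)]))  # LayerNorm(x + FFN(x))
    return out


# Toy sizes: d_model = 2, d_ff = 3
w1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]
b1 = [0.0, 0.1, 0.0]
w2 = [[0.5, 0.0], [0.0, 0.5], [1.0, 1.0]]
b2 = [0.0, 0.0]
tokens = [[1.0, 2.0], [3.0, -1.0]]
print(ffn_sublayer(tokens, w1, b1, w2, b2))
```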

The original Transformer stacked 6 encoder layers; later encoders such as BERT use 12 to 24. Each layer refines the representations produced by the one before it.

Decoder

The decoder is responsible for generating output. Each decoder layer includes three parts:

1. Masked Self-Attention: Similar to the encoder's Self-Attention, except that each word can attend only to itself and the words before it, not to the words after it. This prevents the model from "seeing the future" during text generation.

2. Cross-Attention: This layer allows the decoder to attend to the encoder's output. For example, when translating from English to Persian, the decoder has access to all the English input words while generating each Persian word.

3. Feed-Forward Network: Similar to the encoder.
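The "no seeing the future" rule of masked self-attention is typically implemented by setting the scores of later positions to negative infinity before the softmax. Those positions then receive exactly zero weight. The sketch below shows that masking step in plain Python and omits the Value weighting, which works as in ordinary attention. The `causal` flag is a hypothetical parameter. Turning it off gives the bidirectional attention used by encoder-only models such as BERT.

```python
import math


def attention_weights(queries, keys, causal=True):
    """Softmax attention weights; with causal=True, word i only sees words 0..i."""
    d_k = len(keys[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out future positions
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        largest = max(scores)  # always finite: position 0 is never masked
        exps = [math.exp(s - largest) for s in scores]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention_weights(vectors, vectors):
    print([round(w, 2) for w in row])  # zeros above the diagonal
```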

Encoder-Only, Decoder-Only, and Encoder-Decoder Models

Over time, researchers found that depending on the application, only part of the Transformer could be used:

Encoder-Only Models (like BERT): For tasks requiring deep text understanding, such as classification, named entity recognition, and question answering. These models can attend to the entire sentence bidirectionally.

Decoder-Only Models (like GPT): For text generation. These models can only attend to previous words and are optimized for sequential generation. Most large language models today are of this type.

Encoder-Decoder Models (like T5): For tasks requiring input-to-output transformation, such as machine translation and text summarization.

Positional Encoding: How Does the Transformer Understand Word Order?

One of the main challenges of Transformers is that, unlike RNNs, self-attention has no built-in notion of word order. On its own, it treats the input as an unordered set of words. Without extra information, the model cannot tell how "The cat saw the dog" differs from "The dog saw the cat."

To solve this problem, Positional Encoding is used: a vector encoding each word's position is added to that word's embedding. In the original paper, these vectors are built from sine and cosine functions:

PE(pos, 2i) = sin(pos / 10000^(2i/d))

PE(pos, 2i+1) = cos(pos / 10000^(2i/d))

Where pos is the word position, i indexes a pair of embedding dimensions (2i and 2i+1), and d is the total embedding dimension.
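The two formulas above translate directly into code. Below is a minimal sketch in plain Python. In the loop, `two_i` plays the role of 2i (the even dimension index), and each sine/cosine pair shares the same frequency. The sizes in the usage line are arbitrary examples.

```python
import math


def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position vectors."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for two_i in range(0, d_model, 2):  # even dimensions: 0, 2, 4, ...
            angle = pos / (10000 ** (two_i / d_model))
            pe[pos][two_i] = math.sin(angle)  # PE(pos, 2i)
            if two_i + 1 < d_model:
                pe[pos][two_i + 1] = math.cos(angle)  # PE(pos, 2i+1)
    return pe


table = positional_encoding(max_len=50, d_model=16)
print([round(v, 3) for v in table[1][:4]])
```

Each row of `table` is added element-wise to the embedding of the word at that position before the first layer.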

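Why sinusoidal functions? Because they have useful properties:

- They produce a distinct vector for each position

- For any fixed offset k, PE(pos + k) is a linear function of PE(pos). The original authors hypothesized that this makes it easy for the model to attend by relative position

- They can be computed for positions beyond the longest sequence seen during training, although models do not necessarily generalize well to such lengths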

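Many newer models use other schemes. Some use Learned Positional Embeddings (as in BERT and GPT-2), which are learned during training rather than computed from a fixed formula. Others, such as Llama, use Rotary Position Embeddings (RoPE).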

Real-World Applications of Transformers

Natural Language Processing

Machine Translation: Transformers have dramatically improved translation quality. Modern services such as Google Translate use Transformers and translate meaning and sentence context rather than just word by word.

Creative Text Generation: Models like GPT can generate stories, poems, articles, and even programming code. By training on enormous amounts of text, these models have learned complex language patterns.

Automatic Summarization: Transformers can read long texts and generate useful, coherent summaries. This application is very useful in news analysis, scientific research, and information management.

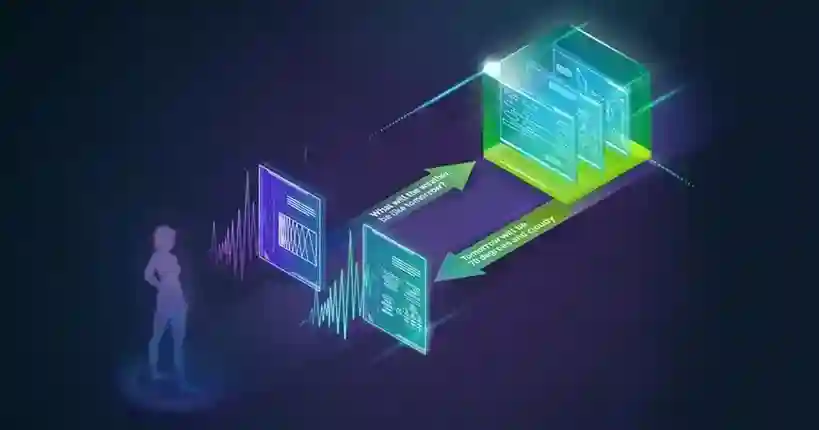

Question Answering: Modern Q&A systems can read a long document and answer specific questions about it. This capability is applicable in search engines, personal assistants, and educational systems.

Sentiment Analysis: Transformers can detect the sentiment of a text's author (positive, negative, neutral). This is very practical in customer review analysis, social media monitoring, and market research.

Computer Vision

Vision Transformer (ViT): In 2020, researchers showed that Transformers could be applied directly to images. An image is divided into small patches (e.g., 16×16 pixels), and each patch is treated like a "word" in a sentence. When pre-trained on large datasets, ViT matched or exceeded Convolutional Neural Networks (CNNs) in image classification.
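To make the "patches as words" idea concrete: a 224×224 RGB image cut into 16×16 patches yields 14 × 14 = 196 patches. Each patch flattens into 16 × 16 × 3 = 768 numbers. Below is a minimal sketch of the splitting step. It assumes the image is stored as nested Python lists of pixels, each pixel a list of channel values. In ViT, each flattened patch is then linearly projected to the model dimension and combined with a position embedding before entering the Transformer.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (rows of pixels, each pixel a list of channels)
    into flattened, non-overlapping patch_size x patch_size patches, row by row."""
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    patch.extend(image[r][c])  # all channels of this pixel
            patches.append(patch)
    return patches


# A blank 224 x 224 RGB image
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = image_to_patches(image, patch_size=16)
print(len(patches), len(patches[0]))  # 196 768
```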

Object Detection and Segmentation: Transformers can identify different objects in an image and mark their boundaries. This is applicable in autonomous vehicles, medical diagnosis, and security.

Text-to-Image Generation: Models like DALL-E use Transformers to generate images from text descriptions. You can say "an astronaut cat painting on Mars" and the model creates an image matching your description.

Audio Processing

Speech Recognition: Transformers play a key role in modern speech recognition systems like Whisper. These systems can convert speech to text with high accuracy, even in the presence of noise or different accents.

Speech Synthesis: Transformers can convert text into natural, human-like speech. Modern systems can even convey appropriate tone, emotion, and emphasis.

Speech Translation: Combining speech recognition, translation, and speech synthesis for live conversation translation.

Time Series Analysis

Transformers have also been applied in time series forecasting:

Financial Forecasting: Analyzing trends in stock prices, currencies, and commodities using historical data.

Demand Forecasting: Predicting product demand for inventory and supply chain management.

Weather Forecasting: Using historical weather data for more accurate future condition predictions.

Health Analysis: Monitoring patients' vital signs and predicting disease progression.

Biology and Medicine

Protein Structure Prediction: AlphaFold 2, whose core Evoformer module is built on attention, largely solved one of biology's biggest problems: predicting the 3D structure of proteins from their amino acid sequences.

Drug Discovery: Transformers can design new drug molecules or predict the effects of existing drugs on various diseases.

Genomics Analysis: Understanding DNA and RNA sequences and predicting gene functions.

Disease Diagnosis: Analyzing medical images (radiology, pathology) to assist in more accurate disease diagnosis.

Key Advantages of Transformers

Parallel Processing

The biggest advantage of Transformers over RNN and LSTM is parallel processing capability. In a 100-word sentence:

- RNN must perform 100 sequential processing steps

- Transformer can process all 100 words simultaneously

This results in:

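- Substantially faster training (the original paper reached state-of-the-art translation quality at a small fraction of the training cost of earlier models)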

- Full utilization of GPU and TPU power

- Ability to train much larger models

Long-term Dependency Management

In RNNs and LSTMs, information from the first word of a sentence weakened after passing through dozens of later words. In Transformers, each word can attend directly to any other word in the context window, even one 1,000 words away. This means:

- Better understanding of complex sentences

- Context preservation in long texts

- Identifying distant relationships

Scalability

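Transformers have a notable property: within the ranges studied so far, larger models trained on more data perform better. Empirical studies found that loss falls smoothly, following power laws, as model size, data, and compute grow. These relationships are known as Scaling Laws: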

- GPT-2 with 1.5 billion parameters

- GPT-3 with 175 billion parameters

- Some recent models reportedly exceed a trillion parameters

With increased model size, training data, and computational power, performance improves predictably.

Architectural Flexibility

Transformer is a general architecture that can be adapted for different tasks:

- Changing the number of layers

- Changing the number of attention heads

- Adding specialized layers

- Combining with other architectures

This flexibility has made Transformers applicable in very diverse fields from text to images, audio, and even games.

Transfer Learning

One of the most powerful features of Transformers is Transfer Learning capability. This means:

- First, a large model is pre-trained on huge amounts of general data

- Then, the same model is fine-tuned with a small amount of specific data for a particular task

For example, GPT-3 was pre-trained on hundreds of billions of tokens of internet text. It can then be fine-tuned for a specific task with just a few hundred or a few thousand examples, for instance to analyze your company's customer reviews. Building a comparable model from scratch would require vastly more data.

Transformer Challenges and Solutions

Quadratic Computational Complexity

The biggest challenge of standard Transformers is the O(n²) computational complexity of the attention mechanism, where n is the sequence length. Every token is compared with every other token, so doubling the sequence length quadruples the attention cost.

For a 1,000-word sequence, the model computes 1,000,000 attention scores per head in each layer. For a 10,000-word sequence, this rises to 100,000,000, which quickly becomes expensive in both computation and memory.
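A quick back-of-the-envelope calculation shows why the quadratic term matters. The sketch below counts query-key scores. It also estimates the memory needed to store one layer's full attention matrices, assuming 32 heads and 16-bit (2-byte) values. Both are illustrative settings, not the configuration of any particular model. Memory-efficient kernels such as FlashAttention avoid storing this full matrix, but the number of scores computed still grows as n².

```python
def attention_scores(seq_len, num_heads=1, num_layers=1):
    """Query-key scores computed: seq_len**2 per head per layer."""
    return seq_len * seq_len * num_heads * num_layers


def attention_matrix_gib(seq_len, num_heads=32, bytes_per_value=2):
    """GiB needed to materialize one layer's attention matrices (assumed 32 heads, fp16)."""
    return seq_len * seq_len * num_heads * bytes_per_value / 2**30


for n in (1_000, 10_000, 100_000):
    print(f"n={n:>7,}: {attention_scores(n):>15,} scores per head, "
          f"~{attention_matrix_gib(n):,.1f} GiB per layer")
```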

Solutions:

Sparse Attention: Instead of each word attending to all other words, it only attends to a subset. For example:

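- Sliding Window: Each word attends only to a fixed number of neighboring words (e.g., 256 before and 256 after it)

- Global Tokens: A few special tokens attend to, and are attended by, every word

- Random Attention: Each word also attends to a few randomly chosen words, which helps information flow across the sequence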
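Below is a minimal sketch of how a sliding window and global tokens combine into one attention mask, following the patterns listed above. Random attention is omitted. The window size and the choice of global token are illustrative parameters. For long sequences, the number of allowed pairs grows roughly as n × (2w + 1) rather than n², where w is the window size.

```python
def sparse_attention_mask(seq_len, window, global_tokens=()):
    """mask[i][j] is True if token i may attend to token j.

    Each token sees neighbours within `window` positions on either side;
    tokens listed in `global_tokens` attend to, and are attended by, every token.
    """
    global_set = set(global_tokens)
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            local = abs(i - j) <= window
            row.append(local or i in global_set or j in global_set)
        mask.append(row)
    return mask


def count_allowed(mask):
    """Number of (query, key) pairs that are actually scored."""
    return sum(sum(row) for row in mask)


mask = sparse_attention_mask(seq_len=8, window=1, global_tokens=[0])
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
print(count_allowed(mask), "of", 8 * 8, "pairs")  # 34 of 64 pairs
```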

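FlashAttention: This algorithm computes exact attention, so results are unchanged. It splits the computation into tiles that fit in fast on-chip GPU memory, which greatly reduces slow memory traffic. This often speeds up attention by 2-4 times and reduces its memory use from quadratic to linear in sequence length.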

Linear Transformers: Architectures that reduce complexity from O(n²) to O(n). For example:

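- Linformer: Projects keys and values to a fixed, shorter length, so the attention matrix no longer grows quadratically

- Performer: Approximates softmax attention with random-feature kernel methods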

- RWKV: Combining RNN and Transformer advantages

Mixture of Depths (MoD): In each layer, a router selects only some tokens for full processing, while the others skip the layer through the residual connection. The authors report matching baseline quality while using substantially fewer FLOPs per forward pass.

High Memory Consumption

Large Transformer models require significant memory:

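- GPT-3 (175B parameters): About 350 gigabytes just to store the weights at 16-bit precision (about 700 GB at 32-bit)

- During inference: The keys and values of all previous tokens must be kept in a KV Cache, which grows with sequence length and batch size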
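Both figures follow from simple arithmetic. The sketch below reproduces the 350 GB estimate: 175 billion parameters × 2 bytes at 16-bit precision. It also computes the KV cache size, assuming GPT-3's published configuration of 96 layers, 96 attention heads, and a head dimension of 128. The sequence length and batch size in the last line are arbitrary example values.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory to hold the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_value=2):
    """Keys and values (hence the factor 2) cached for every layer, head and token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_value


print(weight_memory_gb(175e9, 2))  # 350.0 (fp16 weights)
print(weight_memory_gb(175e9, 4))  # 700.0 (fp32 weights)

per_token = kv_cache_bytes(num_layers=96, num_kv_heads=96, head_dim=128, seq_len=1)
print(per_token / 2**20, "MiB of KV cache per token")
print(kv_cache_bytes(96, 96, 128, seq_len=2048, batch_size=8) / 1e9, "GB for 8 sequences of 2,048 tokens")
```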

Solutions:

Quantization: Reducing numerical precision from 32-bit floating point to 8-bit or even 4-bit integers. This cuts memory by 4 to 8 times, usually with little loss in quality.
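Below is a minimal sketch of the simplest scheme, symmetric per-tensor int8 quantization. Each weight is divided by a scale chosen so that the largest magnitude maps to 127, then rounded to an integer. Production methods, with per-channel or per-group scales, 4-bit formats, and outlier handling, are more elaborate. This sketch only shows the core idea and why the rounding error stays within half a quantization step. The weights are made-up numbers.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: returns integer codes in [-127, 127] and a scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.003, 0.9, -0.55]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
print(codes)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # at most scale / 2
```

Each weight is stored in one byte instead of four. That is where the 4× memory saving over 32-bit floats comes from, plus one shared scale per tensor.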

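Grouped-Query Attention (GQA): Instead of giving every query head its own key and value heads, groups of query heads share a single key/value head. This shrinks the KV Cache by the group size. For example, the reduction is 8 times when 64 query heads share 8 KV heads, as in Llama 2 70B.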
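Below is a minimal sketch of the bookkeeping behind GQA. Consecutive groups of query heads are mapped to a shared key/value head, and the KV cache shrinks in proportion to the number of KV heads kept. The configuration with 64 query heads and 8 KV heads is used as an example. With a single KV head, this becomes multi-query attention (MQA).

```python
def kv_head_for(query_head, num_query_heads, num_kv_heads):
    """Index of the key/value head that a given query head reads from."""
    assert num_query_heads % num_kv_heads == 0
    group_size = num_query_heads // num_kv_heads
    return query_head // group_size


def kv_cache_reduction(num_query_heads, num_kv_heads):
    """Factor by which the KV cache shrinks versus one KV head per query head."""
    return num_query_heads / num_kv_heads


groups = {}
for q in range(64):
    groups.setdefault(kv_head_for(q, 64, 8), []).append(q)
print(groups[0])  # query heads 0-7 share KV head 0
print(kv_cache_reduction(64, 8))  # 8.0
```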

Small Language Models (SLM): Models with 1-7 billion parameters optimized for personal devices that can run on laptops or mobile phones.

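Knowledge Distillation: Training a small model (the Student) to reproduce the outputs of a large model (the Teacher). The student can be several times smaller while retaining most of the teacher's performance. DistilBERT, for example, is 40% smaller than BERT and retains about 97% of its language-understanding performance.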
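Below is a minimal sketch of the soft-target loss commonly used for distillation, following Hinton et al. Both models' logits are softened with a temperature T. The student is penalized by the KL divergence between the teacher's and its own softened distributions, and the result is scaled by T². In practice this term is usually mixed with the ordinary loss on the true labels. The logits below are made-up numbers.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature: higher T gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    largest = max(scaled)
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.5, 0.2]
print(distillation_loss([3.8, 1.4, 0.3], teacher))  # student close to teacher: small loss
print(distillation_loss([0.2, 1.5, 4.0], teacher))  # student disagrees: larger loss
```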

Hallucination

One of the serious problems of Transformer models is generating incorrect information with high confidence. The model might confidently say "The capital of France is Berlin" or provide completely wrong historical information.

Causes of hallucination:

- Learning statistical patterns instead of real-world facts

- Pressure to generate responses even with uncertainty

- Interference and contradictions in training data

- Tendency to complete patterns even with insufficient information

Solutions:

Retrieval-Augmented Generation (RAG): Before generating a response, the model first searches for relevant information from reliable databases and then responds based on it. This method significantly increases accuracy.
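Below is a toy sketch of the retrieval step. It assumes a tiny in-memory document list and uses plain word-overlap (cosine) similarity as a stand-in for the learned embeddings and vector database a real RAG system would use. The retrieved passage is placed into the prompt so the model can ground its answer in it. The language model call itself is left out, and the prompt wording in `build_prompt` is hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lower-cased word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query, documents, k=1):
    """Hypothetical prompt template that grounds the answer in retrieved text."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\n\nQuestion: {query}"


docs = [
    "Paris is the capital of France.",
    "Berlin is the capital of Germany.",
    "The cat sat on the roof.",
]
print(build_prompt("What is the capital of France?", docs))
```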

Chain of Thought (CoT): Prompting or training the model to reason step by step. When the model must spell out its reasoning, errors often decrease, especially on multi-step problems.

Reinforcement Learning from Human Feedback (RLHF): Using human preference judgments to train the model to produce more accurate and helpful responses.

Confidence Scoring: Enabling the model to say "I don't know" or to indicate how confident it is in its answer.

Bias and Fairness

Transformers are trained on internet data that itself contains human biases:

- Gender biases (e.g., nurse = woman, engineer = man)

- Racial and cultural biases

- Socio-economic biases

- Language biases (excessive focus on English)

Solutions:

- More diversity in training data

- Filtering and careful review of data before training

- Regular tests to identify biases

- Using de-biasing techniques during training

High Computational Cost

Training large Transformer models is very expensive:

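- GPT-3: An estimated $4.6 million for a single training run

- Energy: Training GPT-3 was estimated to consume about 1,287 MWh of electricity, roughly the annual consumption of over a hundred homes

- Hardware: Thousands of GPUs running for weeks or months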

Solutions:

- Mixture of Experts (MoE): Instead of activating all parameters, only part of the network (a few "expert" sub-networks) is activated for each input. For example, Mixtral 8x7B has about 47 billion parameters in total but uses only about 13 billion for each token.

- Progressive Training: Starting with a small model and gradually expanding it instead of starting directly with a large model.

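- Low-Rank Adaptation (LoRA): Instead of fine-tuning all parameters, only small low-rank matrices added to the frozen weights are trained. For full fine-tuning of GPT-3 175B as the baseline, the LoRA paper reports up to 10,000 times fewer trainable parameters and about 3 times less GPU memory.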

- Open-source models: Reusing pre-trained models instead of training from scratch.
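Below is a minimal sketch of top-k expert routing, the core of Mixture of Experts. It assumes a common variant: a router scores every expert, only the k best are run, and their outputs are mixed with softmax weights renormalized over those k. Mixtral uses this scheme, for example. Here the router scores are passed in directly, and the experts are tiny placeholder functions (`make_expert` is hypothetical). In a real model both are learned layers.

```python
import math


def top_k_gates(router_logits, k=2):
    """Pick the k highest-scoring experts; softmax their scores into gate weights."""
    chosen = sorted(range(len(router_logits)), key=lambda e: router_logits[e], reverse=True)[:k]
    largest = max(router_logits[e] for e in chosen)
    exps = {e: math.exp(router_logits[e] - largest) for e in chosen}
    total = sum(exps.values())
    return {e: v / total for e, v in exps.items()}


def moe_layer(token, experts, router_logits, k=2):
    """Run only the selected experts and return the gate-weighted sum of their outputs."""
    gates = top_k_gates(router_logits, k)
    outputs = {e: experts[e](token) for e in gates}  # unselected experts never run
    return [sum(gates[e] * outputs[e][i] for e in gates) for i in range(len(token))]


calls = []


def make_expert(index):
    """Placeholder expert: scales its input and records that it was called."""
    def expert(x):
        calls.append(index)
        return [v * (index + 1) for v in x]
    return expert


experts = [make_expert(i) for i in range(4)]
print(moe_layer([1.0, 2.0], experts, router_logits=[0.1, 2.0, -1.0, 1.5], k=2))
print(sorted(calls))  # [1, 3]: only 2 of the 4 experts did any work
```

Compute per token scales with k, not with the total number of experts. That is why an MoE model's total parameter count can far exceed the parameters it actually uses for each token.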

Recent Developments in Transformers

Hybrid Models

Researchers have found that combining Transformers with other architectures can yield better results:

Mamba: A new architecture based on selective State Space Models (SSMs), with complexity linear in sequence length. Its authors report language-modeling quality comparable to Transformers of similar size, and results on sequences up to a million tokens long.

Jamba: Combines Transformer and Mamba layers in a single model, aiming for the best of both worlds: the Transformer's modeling power and Mamba's efficiency.

RWKV: Combining RNN features (linear complexity) with Transformer power. Can infer like RNN (fast) but train like Transformer (parallel).

RetNet (Retentive Networks): Replacing attention mechanism with "retention" mechanism that has both parallel training and fast inference.

Multimodal Models

The new generation of Transformers can process multiple data types simultaneously:

Gemini: Google's model that natively understands text, images, audio, and video. You can show an image and ask questions about it or give a video and ask for a summary.

GPT-4 Vision: Ability to understand images and explain them, answer visual questions, and even read text from images.

CLIP: OpenAI's model that can understand the relationship between images and texts. The foundation of many image generation models.

These models, by learning shared representations for different data types, can perform more complex tasks requiring multi-sensory understanding.

Reasoning Models

A new generation of Transformer models focused on deep reasoning:

o1 and o3-mini: OpenAI models that spend extra time "thinking" before answering. They can solve complex problems in mathematics, programming, and logical reasoning.

o4-mini: Smaller, faster version for efficient reasoning.

These models, using Chain of Thought technique and Reinforcement Learning, have learned to solve problems step-by-step and verify their answers.

Efficient Transformers for Edge

Edge AI: Moving toward running models on personal devices instead of cloud servers. Advantages:

- Privacy preservation (data doesn't leave the device)

- Reduced latency (no need to communicate with server)

- Reduced cloud costs

- Offline functionality

Models like Phi-3, Gemma, and Llama 3.2 are optimized to run on smartphones and laptops.

Specialized Transformers

Code Models: Models like Codex, Code Llama, and DeepSeek Coder specifically for understanding and generating programming code. They can:

- Generate code from natural descriptions

- Find bugs

- Explain code

- Optimize code

Scientific Models: Models trained on scientific papers, laboratory data, and specialized knowledge to assist scientific research.

Legal and Medical Models: Models with specialized knowledge in specific fields that can help professionals.

The Future of Transformers

Path to AGI

Many researchers believe that scaled Transformers are one of the main paths to Artificial General Intelligence (AGI). AGI means a system that can perform any mental task a human can.

Remaining challenges:

- Causal Reasoning: Understanding cause and effect relationships, not just correlations

- Multi-step Learning: Solving problems requiring long-term planning

- True Generalization: Transferring knowledge to completely new situations

- Physical World Understanding: Understanding physics laws and interacting with the real world

World Models

An important trend is developing World Models - models that try to have a mental simulation of the world:

- Understanding physics (if I drop something, it falls)

- Understanding social relationships (if I insult someone, they get upset)

- Predicting the future (if I do this, what will happen?)

Proponents argue that such models could bring AI closer to AGI because they would "understand" the world rather than just learn statistical patterns.

Continual Learning

Current models are fixed after training. Future models should be able to:

- Learn from interactions with users

- Learn new knowledge without forgetting old knowledge

- Optimize themselves

Neuromorphic Transformers

Neuromorphic Computing: Designing specialized chips that mimic brain neuron behavior. These chips can:

- Potentially reduce energy consumption by orders of magnitude

- Increase inference speed

- Enable execution on IoT and edge devices

Combination with Other Technologies

Transformer + Blockchain: Using blockchain to validate AI outputs and manage data ownership rights.

Transformer + Quantum Computing: Exploring whether quantum computers could speed up training or solve hard optimization problems.

Transformer + Robotics: Using Transformers for intelligent robot control and physical world interaction.

Tools and Resources for Working with Transformers

Deep Learning Frameworks

PyTorch: The most popular framework for Transformer model research and development. Flexible, easy to debug, and with a large community.

TensorFlow: Google's powerful framework with excellent tools for production and deployment. TensorFlow Lite for mobile devices.

Keras: A high-level API that simplifies working with deep models. It ships with TensorFlow as tf.keras, and Keras 3 can also run on JAX and PyTorch.

JAX: Google's library for high-performance numerical computing, with automatic differentiation and JIT compilation.

Specialized Transformer Libraries

Hugging Face Transformers: The most powerful and complete library for working with Transformers. Access to thousands of pre-trained models, easy training and inference tools.

Sentence Transformers: Specialized for generating sentence embeddings and semantic search applications.

Fairseq: Meta's library for NLP research and machine translation.

T5X: Google's efficient implementation of the T5 model.

Training Environments

Google Colab: Free Jupyter Notebook environment with GPU access. Great for experimentation and learning.

Kaggle Notebooks: Similar to Colab with ready datasets and ML competitions.

Paperspace Gradient: Cloud environment for training large models with powerful GPUs.

AWS SageMaker, Google Vertex AI, Azure ML: Enterprise platforms for training and deploying large models.

Datasets

Common Crawl: Billions of web pages for pre-training language models.

The Pile: Diverse 800GB dataset for training language models.

ImageNet: Gold standard for image classification.

GLUE and SuperGLUE: Standard benchmarks for evaluating NLP models.

Ethical and Social Considerations

Privacy

Large Transformer models are trained on public internet data that may contain personal information. This creates challenges:

- The model might memorize and reproduce private information

- Possibility of extracting information from trained models

- Conflict between data needed for training and individual privacy

Solutions include federated learning, removing sensitive information from training data, and limiting the model's ability to memorize specific information.

Environmental Impact

Training large models has significant energy consumption:

- GPT-3's training was estimated to emit about 552 tonnes of CO2-equivalent, roughly the annual emissions of 120 passenger cars

- Large data centers are major consumers of water and electricity

Solutions:

- Using renewable energy

- Optimizing algorithms to reduce computation

- Reusing pre-trained models

- Developing more efficient models

Impact on Employment

Transformers can automate many tasks:

- Translation, writing, basic programming

- Customer support, data analysis

- Content generation, graphic design

These changes create challenges and opportunities:

- Need for workforce retraining

- Creating new jobs in AI field

- Changing the nature of human work

Malicious Use

Transformers can be used for harmful purposes:

- Generating fake news and disinformation

- Sophisticated phishing and fraud

- Generating harmful or illegal content

- Manipulating public opinion

Solutions include content filters, abuse detection systems, and appropriate laws and regulations.

Transparency and Interpretability

Large Transformer models behave like "black boxes": we can see the output but often cannot tell why the model produced it. This is a problem in high-stakes applications such as medical diagnosis or legal decisions.

Efforts to improve interpretability:

- Visualizing attention patterns to see what parts of input the model attended to

- Analyzing different layers to understand what information each layer learned

- Developing Explainable AI (XAI) methods

Access and Digital Divide

Advanced Transformer models require enormous resources that only large tech companies can access. This creates gaps:

- Academic researchers and developing countries have limited access

- Power concentration in a few companies

- Lack of diversity in technology development

Solutions include developing open-source models, sharing computational resources, and government investment in research infrastructure.

Industrial and Commercial Applications

Financial Sector

Financial Analysis and Forecasting: Transformers can analyze financial reports, economic news, and market data to predict future trends.

Algorithmic Trading: Using Transformers to analyze market sentiment, detect patterns, and make automated trading decisions.

Fraud Detection: Identifying suspicious patterns in transactions with high accuracy.

Credit Risk Assessment: Analyzing customer data to predict loan repayment probability.

Healthcare Sector

Diagnosis and Treatment: Assisting doctors in diagnosing diseases from symptoms, medical images, and patient history.

Drug Discovery: Accelerating new drug development by predicting different molecule effects.

Personalized Medicine: Analyzing genetics and medical history to provide customized treatments.

Telemedicine: Medical chatbots for initial consultation and patient follow-up.

Education

Personalized Learning: Systems that provide appropriate content by understanding each student's knowledge level and learning style.

Virtual Teacher: Answering student questions, explaining complex concepts, and providing immediate feedback.

Educational Content Generation: Creating exercises, tests, and educational materials aligned with curriculum.

Translation and Access: Automatically translating educational content into different languages for global access.

Marketing and Sales

Content Creation: Writing product descriptions, social media posts, marketing emails, and blog articles.

Smart SEO: Optimizing content for search engines by analyzing keywords and competitors.

Personalization: Providing customized recommendations and content to each user based on their behavior and interests.

Sales Chatbots: Automatically answering customer questions and guiding through the purchase process.

Customer Service

Advanced Chatbots: Answering common questions, solving simple problems, and directing to appropriate resources.

Sentiment Analysis: Identifying dissatisfied customers and prioritizing requests based on urgency.

Multilingual Support: Providing services to customers in their native language with automatic translation.

Call Summarization: Automatically generating conversation summaries for better follow-up.

Media and Entertainment

Subtitle Generation: Automatically creating subtitles for videos and films.

Content Recommendation: Suggesting movies, music, or articles based on user interests.

Music and Audio Generation: Creating music, sound effects, and voiceovers.

Scriptwriting: Helping screenwriters with ideation and story development.

Legal and Judicial

Legal Research: Searching and analyzing legal cases, laws, and related regulations.

Contract Drafting: Automatically generating standard contract drafts.

Outcome Prediction: Analyzing similar cases to predict success probability in court.

Document Summarization: Summarizing long legal documents for faster review.

Human Resources

Smart Recruitment: Screening resumes, matching candidates with job positions, and conducting initial interviews.

Organizational Culture Analysis: Reviewing employee surveys and identifying issues.

Training Programs: Creating customized training content for employees.

Performance Evaluation: Analyzing data and providing constructive feedback.

Practical Tips for Starting with Transformers

For Beginners

1. Start with Pre-trained Models: Instead of training from scratch, use ready-made models from Hugging Face. Models like BERT, GPT-2, or T5 are excellent for learning.

2. Use Google Colab: For practice and experimentation, Google Colab offers free (usage-limited) GPU access.

3. Learn Basic Concepts: Before implementation, understand fundamental concepts like attention, embedding, and tokenization well.

4. Start with Simple Tasks: First try simple tasks like text classification or sentiment analysis.

For Professionals

1. Effective Fine-tuning: Use techniques like LoRA for efficient fine-tuning.

2. Production Optimization: Use techniques like quantization, pruning, and knowledge distillation to reduce size and increase speed.

3. Long Sequence Management: For working with long texts, use sparse attention techniques or RAG.

4. Monitoring and Evaluation: Build quality monitoring systems to identify hallucination and other issues.
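LoRA, recommended above for efficient fine-tuning, freezes the pre-trained weight matrix W and learns a low-rank update. The layer computes x·W + (α/r)·x·A·B, where A and B share a small inner dimension r. Below is a minimal sketch in plain Python using the row-vector convention. It assumes B starts at zero, so the model initially behaves exactly like the frozen one. The 4096 × 4096 layer and rank 8 in the parameter count are example values.

```python
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_forward(x, w_frozen, a, b, alpha, rank):
    """x W (frozen) plus the scaled low-rank update x A B; only A and B are trained."""
    base = matmul(x, w_frozen)
    update = matmul(matmul(x, a), b)
    scale = alpha / rank
    return [[y + scale * u for y, u in zip(row_y, row_u)] for row_y, row_u in zip(base, update)]


def trainable_params(d_in, d_out, rank=None):
    """Full fine-tuning trains d_in * d_out weights; LoRA trains rank * (d_in + d_out)."""
    return d_in * d_out if rank is None else rank * (d_in + d_out)


rng = random.Random(0)
d_in, d_out, r = 6, 4, 2
x = [[rng.gauss(0, 1) for _ in range(d_in)]]
w = [[rng.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
a = [[rng.gauss(0, 0.01) for _ in range(r)] for _ in range(d_in)]  # small random init
b = [[0.0] * d_out for _ in range(r)]  # zero init: no change at the start
print(lora_forward(x, w, a, b, alpha=16, rank=r) == matmul(x, w))  # True

print(trainable_params(4096, 4096), trainable_params(4096, 4096, rank=8))  # 16777216 65536
```

In this example, rank 8 trains 256 times fewer parameters than updating the full 4096 × 4096 matrix.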

Learning Resources

Online Courses:

- CS224N (Stanford): Natural Language Processing with Deep Learning

- Fast.ai: Practical Deep Learning Course

- Hugging Face Course: Learning to Work with Transformers

Books:

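- "Natural Language Processing with Transformers" by Lewis Tunstall, Leandro von Werra, and Thomas Wolf (Hugging Face)

- "Attention Is All You Need": the original Transformer paper (Vaswani et al., 2017)

- "Deep Learning" by Ian Goodfellow, Yoshua Bengio, and Aaron Courville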

Online Communities:

- Hugging Face Forums

- Reddit r/MachineLearning

- Discord and Slack AI Channels

Conclusion

In less than a decade, the Transformer model has transformed from a research idea into the foundation of modern artificial intelligence. This architecture, using the Attention Mechanism, has overcome fundamental limitations of previous models and enabled the development of intelligent systems that previously seemed impossible.

From natural language processing and computer vision to drug discovery and financial forecasting, Transformers have found diverse applications that have transformed daily life, industry, and science. Large language models like GPT, Claude, and Gemini that we interact with today are all built on this architecture.

However, Transformers face significant challenges: high computational complexity, considerable energy consumption, hallucination, biases, and ethical issues. Ongoing research focuses on solving these problems - from developing more efficient architectures like Mamba and RWKV to techniques like RAG and Chain of Thought for increasing accuracy.

The future of Transformers looks bright. With continuous advances in architecture, training algorithms, and hardware, these models are expected to become more powerful, efficient, and accessible. The path toward Artificial General Intelligence (AGI), development of World Models, and combination with emerging technologies like quantum computing and neuromorphic computing all promise an exciting future.

Ultimately, the Transformer is not just a technical architecture but represents a paradigm shift in how we design and build intelligent systems - moving from manual rules and designed features toward learning patterns from data and using attention to understand complex relationships. This paradigm shift will have a profound and lasting impact on the future of artificial intelligence and the future of human work and life.

✨

With DeepFa, AI is in your hands!!

🚀Welcome to DeepFa, where innovation and AI come together to transform the world of creativity and productivity!

- 🔥 Advanced language models: Leverage powerful models like Dalle, Stable Diffusion, Gemini 2.5 Pro, Claude 4.5, GPT-5, and more to create incredible content that captivates everyone.

- 🔥 Text-to-speech and vice versa: With our advanced technologies, easily convert your texts to speech or generate accurate and professional texts from speech.

- 🔥 Content creation and editing: Use our tools to create stunning texts, images, and videos, and craft content that stays memorable.

- 🔥 Data analysis and enterprise solutions: With our API platform, easily analyze complex data and implement key optimizations for your business.

✨ Enter a new world of possibilities with DeepFa! To explore our advanced services and tools, visit our website and take a step forward:

DeepFa is with you to unleash your creativity to the fullest and elevate productivity to a new level using advanced AI tools. Now is the time to build the future together!