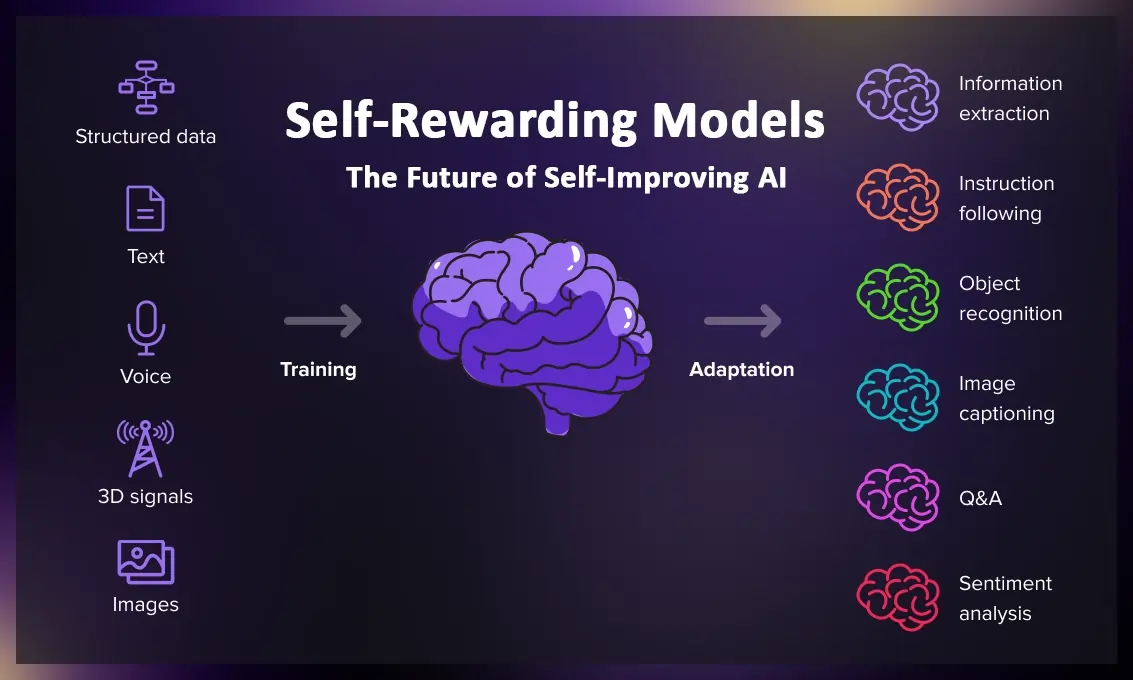

Self-Rewarding Models: The Future of Self-Improving AI

Introduction

Consider a student who not only solves math problems but can also evaluate the quality of their own answers and improve themselves without any teacher. In the world of artificial intelligence, Self-Rewarding Models provide exactly this capability.

Self-Rewarding Models are a revolutionary approach to training large language models where, instead of relying on human feedback or fixed reward models, the model becomes the judge of its own work. These models use the LLM-as-a-Judge technique to evaluate the quality of their responses and improve themselves based on these evaluations.

This approach was introduced by Meta AI researchers in January 2024 and quickly captured the attention of the scientific community. Their initial experiments with Llama 2 70B showed that, after three iterations of training with this method, the model outperformed many existing systems on the AlpacaEval 2.0 leaderboard, including Claude 2, Gemini Pro, and GPT-4 0613.

Why Are Self-Rewarding Models Revolutionary?

Limitations of Traditional Methods

In traditional methods like Reinforcement Learning from Human Feedback (RLHF), a separate reward model is trained based on human preferences. This approach has two fundamental problems:

- Limited to human-level performance: The reward model can only be as good as the human data allows

- No improvement during training: The reward model is trained once and then frozen - it doesn't learn anymore

The Power of Self-Rewarding

Self-Rewarding Models break these limitations:

- Dual improvement: Both the ability to follow instructions and the ability to provide quality rewards improve

- Beyond human limitations: They have the potential to reach superhuman performance

- Continuous learning: In each training iteration, both the main model and the reward system improve

How Do Self-Rewarding Models Work?

The training process for these models occurs in an iterative cycle:

1. Self-Instruction Creation Phase

For a given prompt, the model generates multiple candidate responses. Then, using the LLM-as-a-Judge technique, it evaluates these responses itself and assigns a score (reward) to each.

Imagine you want to write an article about neural networks. The model generates three different versions, then evaluates them itself based on criteria such as scientific accuracy, clarity of expression, and content comprehensiveness.
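The generate-then-judge step can be sketched in a few lines of Python. This is a minimal illustration, not the original implementation: `score_candidates`, `toy_generate`, and `toy_judge` are hypothetical names, and the toy functions stand in for calls to the same underlying LLM (one call to answer, one call with an LLM-as-a-Judge prompt to score).

```python
import random
from typing import Callable, List, Tuple


def score_candidates(
    prompt: str,
    generate: Callable[[str], str],
    judge: Callable[[str, str], float],
    n_candidates: int = 4,
) -> List[Tuple[str, float]]:
    """Sample several responses and let the same model score each one."""
    candidates = [generate(prompt) for _ in range(n_candidates)]
    return [(response, judge(prompt, response)) for response in candidates]


# Toy stand-ins so the sketch runs without a real model (assumptions).
_rng = random.Random(0)


def toy_generate(prompt: str) -> str:
    detail = _rng.choice(["brief", "detailed", "detailed with examples"])
    return f"A {detail} answer to: {prompt}"


def toy_judge(prompt: str, response: str) -> float:
    # Stand-in for a judge prompt that returns a numeric score.
    score = 1.0
    if "detailed" in response:
        score += 2.0
    if "examples" in response:
        score += 2.0
    return score


scored = score_candidates("Explain neural networks", toy_generate, toy_judge)
for response, score in scored:
    print(f"{score:.1f}  {response}")
```

In a real setup both callables would wrap the same model, which is what makes the approach "self-rewarding".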

2. Training with Direct Preference Optimization (DPO)

From the generated responses, preference pairs (better response vs. weaker response) are selected. These pairs are used to train the model using the DPO algorithm.
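As a rough sketch of this step, the snippet below picks the highest- and lowest-scored responses as a (chosen, rejected) pair and computes the standard DPO loss for one pair from log-probabilities. The function names, the "skip ties" rule, and the default `beta` are illustrative assumptions; a real training run would use a library implementation and actual model log-probabilities.

```python
import math
from typing import List, Optional, Tuple


def build_preference_pair(
    scored: List[Tuple[str, float]],
) -> Optional[Tuple[str, str]]:
    """Return (chosen, rejected), or None if all scores are tied."""
    best = max(scored, key=lambda item: item[1])
    worst = min(scored, key=lambda item: item[1])
    if best[1] == worst[1]:
        return None  # no preference signal in this set of responses
    return best[0], worst[0]


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one pair: -log(sigmoid(beta * (chosen_gain - rejected_gain)))."""
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_gain - rejected_gain)
    # Numerically stable form of -log(sigmoid(margin)) = softplus(-margin).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


pair = build_preference_pair([("draft A", 3.0), ("draft B", 5.0), ("draft C", 1.0)])
print(pair)
print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

The loss shrinks as the policy assigns relatively more probability to the chosen response than the frozen reference model does, which is the signal DPO optimizes.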

3. Iteration for Continuous Improvement

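The improved model from each iteration then acts as both generator and judge for the next one: it produces and scores the training data from which its successor is trained. This cycle is intended to improve both the quality of responses and the quality of evaluations from one iteration to the next.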
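The overall cycle can be outlined as a short loop. This is only a skeleton under stated assumptions: `make_pairs` and `dpo_train` are hypothetical callables supplied by the caller, and the dictionary "model" is a placeholder for real model weights.

```python
from typing import Callable, Dict, List, Tuple

Model = Dict[str, int]              # placeholder for real model weights
PreferencePair = Tuple[str, str, str]  # (prompt, chosen, rejected)


def self_rewarding_loop(
    seed_model: Model,
    prompts: List[str],
    make_pairs: Callable[[Model, List[str]], List[PreferencePair]],
    dpo_train: Callable[[Model, List[PreferencePair]], Model],
    iterations: int = 3,
) -> List[Model]:
    """Each model generates and judges the data used to train its successor."""
    models = [seed_model]
    for _ in range(iterations):
        current = models[-1]
        pairs = make_pairs(current, prompts)      # current model generates AND judges
        models.append(dpo_train(current, pairs))  # successor trained on those pairs
    return models


# Toy stand-ins so the skeleton runs end to end (assumptions).
def toy_make_pairs(model: Model, prompts: List[str]) -> List[PreferencePair]:
    return [(p, f"better answer v{model['iteration']}", "weaker answer") for p in prompts]


def toy_dpo_train(model: Model, pairs: List[PreferencePair]) -> Model:
    return {"iteration": model["iteration"] + 1, "trained_on": len(pairs)}


models = self_rewarding_loop({"iteration": 0}, ["q1", "q2"], toy_make_pairs, toy_dpo_train)
print(models[-1])
```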

| Feature | Traditional Method (RLHF) | Self-Rewarding Models |
|---|---|---|
| Reward Model | Separate and Fixed | Integrated and Learning |
| Performance Ceiling | Limited to Human | Superhuman Potential |
| Improvement During Training | No | Yes (Dual) |
| Dependency on Human Data | High | Only for Seed Data |

Process-based Self-Rewarding: The Next Generation

In March 2025, researchers introduced a more advanced version of this technique called Process-based Self-Rewarding Models (PReSRM). Instead of focusing solely on the final answer, this approach also evaluates the reasoning process.

What's the main difference? Suppose a student is solving a math problem:

- Old method: We only check the final answer - is 42 correct or wrong?

- Process-based method: We look at the problem-solving steps - was the correct formula used? Are the calculations logical? Is the reasoning understandable?

This approach has shown strong results on mathematical reasoning and programming problems. In experiments, PReSRM reportedly achieved a 31.6% improvement on challenging GSM8K problems (a well-known math benchmark) compared to traditional methods.
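The difference between the two scoring styles can be shown with a toy example. The sketch below is a simplification, not the published method: the step format (`"a op b = c"`), the function names, and the arithmetic-checking `toy_step_judge` are all assumptions, and in practice the step judge would be the model itself.

```python
import operator
from typing import Callable, List

_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def outcome_reward(steps: List[str], expected: int) -> float:
    """Outcome-only scoring: look at nothing but the final result."""
    final_answer = int(steps[-1].split()[-1])
    return 1.0 if final_answer == expected else 0.0


def process_rewards(
    steps: List[str],
    step_judge: Callable[[List[str], str], float],
) -> List[float]:
    """Process-based scoring: judge every step given the steps before it."""
    return [step_judge(steps[:i], step) for i, step in enumerate(steps)]


def toy_step_judge(previous_steps: List[str], step: str) -> float:
    # Toy judge: only checks that the arithmetic in "a op b = c" is correct.
    left, op, right, _equals, result = step.split()
    return 1.0 if _OPS[op](int(left), int(right)) == int(result) else 0.0


# A solution that reaches 42 through a faulty second step (40 + 3 is 43).
solution = ["5 * 8 = 40", "40 + 3 = 42"]

print(outcome_reward(solution, expected=42))
print(process_rewards(solution, toy_step_judge))
```

Outcome-only scoring accepts this solution because the final number matches, while step-level scoring exposes the faulty step.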

DeepSeek and the Self-Rewarding Evolution

In April 2025, the Chinese company DeepSeek introduced the Self-Principled Critique Tuning (SPCT) technique, adding another dimension of power to Self-Rewarding Models.

How SPCT Works

Imagine judging a cooking competition: you first decide what matters for each dish, then explain every score you give. SPCT teaches the model to work the same way:

- Generate its own evaluation principles: For each response, it defines its own specific criteria (like technical accuracy, clarity, creativity)

- Write detailed critiques: It does not just output a score, but explains why it gave that score

- Improve with Inference-Time Scaling: By generating multiple sets of principles and critiques and voting on them, it increases its accuracy
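The voting idea in the last point can be sketched as follows. This is a minimal illustration under assumptions: `vote_on_scores` is a hypothetical helper, each call to `sample_scores` stands in for one sampled set of principles and critiques that ends in a score per response, and the canned scores are made up so the example is deterministic.

```python
from itertools import cycle
from typing import Callable, List


def vote_on_scores(
    n_responses: int,
    sample_scores: Callable[[], List[float]],
    k: int,
) -> List[float]:
    """Sample k independent judgements and sum the scores per response."""
    totals = [0.0] * n_responses
    for _ in range(k):
        scores = sample_scores()  # one sampled (principles, critique) -> scores
        for index, score in enumerate(scores):
            totals[index] += score
    return totals


# Canned judgements for two responses; single samples disagree with each other.
_canned = cycle([[7, 5], [4, 6], [8, 5], [6, 7], [7, 4]])


def toy_sample_scores() -> List[float]:
    return next(_canned)


totals = vote_on_scores(2, toy_sample_scores, k=5)
best_index = max(range(2), key=totals.__getitem__)
print(totals, best_index)
```

Two of the five individual judgements prefer the second response, but the aggregated totals favor the first, which is the effect that sampling more judgements at inference time is meant to exploit.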

The result? As a reward model, DeepSeek-GRM-27B was reported to outperform much larger models like Nemotron-4-340B and GPT-4o - with a fraction of the computational resources!

This result suggests that in AI, bigger isn't always better - sometimes being smarter is enough.

Real and Tangible Applications

1. Automated Programming Assistant

Imagine asking an AI tool to write code. A Self-Rewarding Model:

- Generates several candidate solutions

- Evaluates them itself in terms of efficiency, readability, and compliance with standards

- Selects the best version and writes better code in subsequent iterations

Researchers have shown that a model called Qwen 2.5 7B, after training with self-rewards, was able to participate in the prestigious MIT Integration Bee competition - where only the best math students compete!

2. Visual Content Generation

In the field of AI image generation, Self-Rewarding Models can:

- Convert users' simple prompts into professional prompts

- Evaluate the aesthetic quality of generated images themselves

- Improve without needing huge labeled datasets

This means higher quality images with less effort from the user.

3. Intelligent Financial Systems

In financial analysis and AI trading, these models can:

- Suggest investment strategies

- Evaluate their risks themselves

- Develop better strategies by learning from results

4. Personalized Education and Learning

Self-Rewarding Models can be intelligent teachers that:

- Evaluate the quality of their own explanations

- Change their explanation method if the student doesn't understand

- Get better at teaching with each interaction

Advanced Techniques: Reinforcement Learning from Self Reward

In May 2025, researchers introduced the RLSR (Reinforcement Learning from Self Reward) technique. This method shows that LLMs can act as judges of themselves - even without access to correct answers!

The Power of Asymmetry

The key to RLSR's success is a simple observation: generating a solution is hard, but verifying its correctness is easier. Think of a Sudoku puzzle: finding the solution is challenging, but checking a completed grid is simple.

These models have been able to perform comparably to traditional methods on complex problems like the Integration Bee (requiring advanced symbolic calculations) and Countdown puzzles - without any labeled data!
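The asymmetry is easy to see for Countdown-style puzzles, where a proposed arithmetic expression must hit a target using only the given numbers. The checker below is an illustrative sketch, not code from the RLSR work: `verify_countdown` is a hypothetical name, and the puzzle rules assumed here are "each given number at most once, with +, -, *, /".

```python
import ast
import operator
from collections import Counter
from fractions import Fraction
from typing import List

_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node: ast.AST, used: List[int]) -> Fraction:
    """Evaluate a parsed expression exactly, recording every number it uses."""
    if isinstance(node, ast.Constant) and type(node.value) is int:
        used.append(node.value)
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        left = _evaluate(node.left, used)
        right = _evaluate(node.right, used)
        return _OPS[type(node.op)](left, right)
    raise ValueError("unsupported expression")


def verify_countdown(expression: str, numbers: List[int], target: int) -> bool:
    """Check a proposed solution: allowed numbers only, and the value hits the target."""
    used: List[int] = []
    try:
        value = _evaluate(ast.parse(expression, mode="eval").body, used)
    except (ValueError, SyntaxError, ZeroDivisionError):
        return False
    within_budget = not (Counter(used) - Counter(numbers))  # no number over-used
    return within_budget and value == target


numbers = [3, 7, 25, 50]
print(verify_countdown("(50 + 7) * 3 - 25", numbers, 146))
print(verify_countdown("50 * 3 - 7 + 3", numbers, 146))
```

Checking a candidate takes one pass over the expression, whereas finding one means searching over many combinations of numbers and operators.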

Challenges and Limitations

Despite impressive advances, this technology has challenges:

1. Risk of Reward Hacking

Like a student who learns how to trick the teacher without actually learning anything, Self-Rewarding Models might learn to give themselves high scores without real improvement.

Anthropic's research on "reward tampering" has shown that models can sometimes, unexpectedly, directly modify their own reward mechanism.

2. Initial Quality

If the initial model is weak, the improvement cycle might not start properly. That's why we still need some seed data from humans.

3. Domain Biases

Models might be good in some domains (like verifiable mathematics) but perform poorly in others (like evaluating creativity).

4. Ethical and Security Issues

Systems that improve automatically raise concerns about ethics in AI and control. Eric Schmidt, former CEO of Google, said: "When a system can improve itself, we should think seriously about pulling the plug."

The Future of Self-Rewarding Models

Recent research shows that this approach is becoming an industry standard:

Meta's Llama 4

Meta has used self-rewarding techniques in its latest model family (Llama 4). This shows that tech giants view this method as part of the future of artificial intelligence.

DeepSeek-V3.2-Exp

DeepSeek's latest model, using Sparse Attention and self-rewarding, has delivered superior performance with high cost efficiency. This shows that combining novel architectures with self-rewarding can have extraordinary results.

Google's AlphaEvolve

In May 2025, Google DeepMind introduced the AlphaEvolve system - an evolutionary agent that uses LLMs to design and optimize algorithms. This system can optimize its own components, which is a step toward AI autonomy.

Connection to Other Concepts

Self-Rewarding Models don't work in a vacuum. They combine with other technologies:

Mixture of Experts (MoE)

Combining self-rewarding with an MoE architecture could create models in which each expert evaluates and improves itself.

Retrieval-Augmented Generation (RAG)

Using RAG with self-rewarding can create models that not only answer but also evaluate the quality of sources found.

Multi-Agent Systems

In multi-agent systems, each agent can be self-rewarding, leading to teams that improve collectively.

Self-Rewarding and the Path to AGI

Some researchers believe Self-Rewarding Models are an important step toward Artificial General Intelligence (AGI). Why?

- Autonomous learning: Models no longer need constant human guidance

- Recursive improvement: Each generation of the model can be the teacher of the next generation

- Beyond data: Not limited to knowledge in training data

Of course, this doesn't mean AGI tomorrow - but the direction of movement is exciting.

Key Tips for Developers

If you want to work with Self-Rewarding Models:

1. Use Appropriate Frameworks

- LangChain for building LLM pipelines

- PyTorch or TensorFlow for deeper implementation

2. Start with Open-Source Models

- Llama 2/3/4 from Meta

- DeepSeek-V3 and DeepSeek-GRM

- Qwen 2.5 for smaller models

3. Focus on Evaluation

A strong evaluation system is essential for detecting reward hacking. Use multiple metrics and benchmarks.
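One simple check, sketched below, is to track the model's average self-assigned reward alongside a score from an external, held-out benchmark and flag iterations where the first rises while the second does not. The function name, the `tolerance` parameter, and all numbers are made up for illustration; a real monitor would combine several external metrics.

```python
from typing import List


def flag_reward_hacking(
    self_rewards: List[float],
    external_scores: List[float],
    tolerance: float = 0.0,
) -> List[int]:
    """Return iteration indices where self-reward rose but the external score did not."""
    if len(self_rewards) != len(external_scores):
        raise ValueError("both series need one value per iteration")
    flagged = []
    for i in range(1, len(self_rewards)):
        self_gain = self_rewards[i] - self_rewards[i - 1]
        external_gain = external_scores[i] - external_scores[i - 1]
        if self_gain > 0 and external_gain <= tolerance:
            flagged.append(i)
    return flagged


# Illustrative numbers only: self-reward keeps climbing, the benchmark stalls.
self_rewards = [3.1, 3.6, 4.2, 4.8]
external_scores = [0.52, 0.58, 0.57, 0.51]

print(flag_reward_hacking(self_rewards, external_scores))
```

A flagged iteration is not proof of reward hacking, only a prompt to inspect the generated preference pairs more closely.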

4. Start with Small Seed Data

No need for millions of samples - a few thousand quality samples can be sufficient.

Self-Rewarding in Various Industries

Medicine and Healthcare

In AI diagnosis and treatment, Self-Rewarding Models can:

- Provide diagnostic suggestions

- Evaluate potential risks themselves

- Improve their accuracy with each new case

Banking and Finance

In digital banking, these models can:

- Improve fraud detection

- Perform more accurate credit assessments

- Personalize customer services

Content Creation and Marketing

In digital marketing, Self-Rewarding Models can:

- Generate engaging content

- Evaluate its quality themselves

- Get better with user feedback

Conclusion: Why Should We Care About Self-Rewarding Models?

Self-Rewarding Models show that machine learning is entering a new phase - a phase where machines not only learn from us but also learn from themselves.

This technology:

- Reduces development costs: Less need for human-labeled data

- Improves performance: Potential to reach superhuman levels

- Is flexible: Can be applied in different domains

- Points toward AGI: A step toward truly intelligent autonomous systems

For those working in the field of artificial intelligence, understanding this technology is no longer optional - this is the future being shaped.

Are you ready to witness a revolution where machines become their own teachers? Self-Rewarding Models show that this future is closer than we think.
