
Edge AI and Edge Computing: Revolution in Local AI Processing

Introduction

Edge AI, or artificial intelligence at the edge, is steering the technology world in a new direction. Until recently, artificial intelligence processing was performed mainly in centralized data centers and public clouds; today these capabilities are moving to the network edge and onto local devices. This paradigm shift reduces response times, can strengthen data privacy, and reduces dependence on internet connectivity.

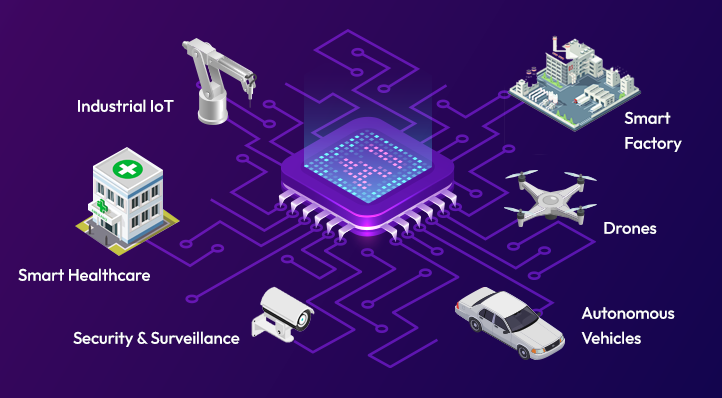

Combining edge computing with artificial intelligence enables instant decision-making and data processing where the data is generated. This approach has applications ranging from autonomous vehicles to intelligent security systems and medical devices.

Definition and Key Concepts of Edge AI

What is Edge AI?

Edge AI means running machine learning algorithms and neural networks on devices located at the network edge. These devices can include smartphones, security cameras, IoT sensors, vehicles, and even medical devices.

Edge Computing

Edge computing moves data processing from centralized data centers to locations as close as possible to the data source. This minimizes latency and reduces bandwidth consumption.

Key Advantages of Edge AI

1. Low Latency

One of the most important advantages of Edge AI is a significant reduction in response time. Sending data to a centralized server and waiting for the result can take hundreds of milliseconds, whereas on-device inference with a suitably optimized model and hardware can bring this down to around 10 milliseconds or less.

2. Privacy Protection

Because data is processed locally, sensitive information does not need to be sent to external servers. This reduces the exposure of the data and helps address privacy concerns.

3. Independence from Internet Connection

Edge AI allows devices to operate without a constant internet connection. Even if the connection is lost, devices can keep running and making decisions.

4. Reduced Bandwidth Costs

With local data processing, the need to transfer large volumes of information to data centers is reduced, resulting in lower bandwidth-related costs.
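To put rough numbers on this, the back-of-the-envelope sketch below compares a camera that streams raw video continuously with one that runs detection locally and uploads only short event summaries. The 4 Mbit/s bitrate, 1,000 events per day, and ~1 KB per event are illustrative assumptions, not measurements.

```python
# Illustrative bandwidth comparison; all input figures are assumptions.
SECONDS_PER_DAY = 24 * 60 * 60


def daily_megabytes_streaming(bitrate_mbps: float) -> float:
    """Data sent per day (MB) when raw video is streamed continuously."""
    return bitrate_mbps * SECONDS_PER_DAY / 8  # megabits -> megabytes


def daily_megabytes_events(events_per_day: int, bytes_per_event: int) -> float:
    """Data sent per day (MB) when only detection results are uploaded."""
    return events_per_day * bytes_per_event / 1_000_000


stream = daily_megabytes_streaming(4.0)        # assumed 4 Mbit/s camera stream
events = daily_megabytes_events(1_000, 1_000)  # assumed 1,000 events of ~1 KB
print(f"streaming: {stream:,.0f} MB/day, events only: {events:,.0f} MB/day")
```

Under these assumptions the difference is roughly 43 GB versus 1 MB per camera per day; real savings depend on the bitrate and on how often events occur.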

Underlying Technologies of Edge AI

Specialized Processors

1. Neural Processing Units (NPUs)

These processors are designed specifically to execute neural network operations. Companies such as Intel, Qualcomm, and Apple produce NPUs powerful enough to run complex models on local devices.

2. Graphics Processing Units (GPUs)

With their parallel computing capabilities, modern GPUs are well suited to running deep learning models. Companies such as NVIDIA and AMD offer low-power yet capable GPUs for Edge AI applications.

3. Field-Programmable Gate Arrays (FPGAs)

FPGAs can be reconfigured at the hardware level and tailored to specific applications. This flexibility lets them be adapted to implement a wide variety of models.

Development Frameworks

1. TensorFlow Lite

TensorFlow Lite is the lightweight and optimized version of TensorFlow for mobile and Edge devices. This framework enables running machine learning models with high efficiency and low energy consumption.

2. PyTorch Mobile

PyTorch Mobile is PyTorch's Edge AI solution for deploying PyTorch models on mobile and Edge devices.

3. ONNX Runtime

ONNX Runtime is a cross-platform inference engine for models in the ONNX format, which can be exported from frameworks such as PyTorch and TensorFlow, and it runs these models efficiently in Edge environments.

Model Optimization Techniques for Edge AI

Quantization

Quantization reduces the numerical precision of a model's weights (and often its activations) to decrease its size and computational cost. Instead of 32-bit floating-point numbers, models can run with 8-bit integers or even lower precision.

Benefits of Quantization:

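- About 75% reduction in model size (an 8-bit value takes a quarter of the storage of a 32-bit value)

- Up to 4x faster inference, depending on hardware support for low-precision arithmetic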

- Reduced energy consumption
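As a minimal sketch of how 32-bit values can be mapped to 8-bit integers, the code below applies per-tensor affine (asymmetric) int8 quantization to a small list of weights and then dequantizes them. This is one common scheme shown for illustration; real toolchains such as TensorFlow Lite may use symmetric or per-channel variants, and the function names here are hypothetical.

```python
def quantize_int8(values):
    """Per-tensor affine quantization of floats to int8 in [-128, 127].

    Returns the integer values plus the (scale, zero_point) needed to
    approximately reconstruct the floats: real ~= (q - zero_point) * scale.
    """
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)  # keep 0.0 representable
    scale = (hi - lo) / 255 or 1.0                          # 256 levels; avoid scale 0
    zero_point = round(-128 - lo / scale)                   # int8 code that represents 0.0
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)

fp32_bytes = 4 * len(weights)  # 32-bit floats: 4 bytes each
int8_bytes = 1 * len(q)        # 8-bit integers: 1 byte each
print(q, restored)
print(f"size reduction: {1 - int8_bytes / fp32_bytes:.0%}")  # 75%
```

Each restored value differs from the original by at most about half a quantization step (`scale / 2`), which is the accuracy cost traded for the 4x smaller storage.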

Pruning

This technique removes neurons or connections that have minimal impact on model performance. Pruning can be structured (removing whole neurons, channels, or filters) or unstructured (removing individual weights).
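The two flavors can be sketched with magnitude as the importance criterion, which is one common heuristic among several. The helper names below are hypothetical, and a real workflow would usually fine-tune the model after pruning to recover accuracy.

```python
import math


def magnitude_prune(weights, sparsity):
    """Unstructured pruning: zero out the fraction `sparsity` of weights
    with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # indices ordered from smallest to largest magnitude
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def prune_rows(matrix, n_keep):
    """Structured pruning: keep the n_keep rows (neurons) with the largest
    L2 norm; also return their indices so the next layer can be trimmed."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in matrix]
    strongest = sorted(range(len(matrix)), key=lambda i: norms[i], reverse=True)
    keep = sorted(strongest[:n_keep])  # preserve original neuron order
    return [matrix[i] for i in keep], keep


print(magnitude_prune([0.9, -0.05, 0.3, 0.01, -0.7, 0.2], 0.5))
# [0.9, 0.0, 0.3, 0.0, -0.7, 0.0]
print(prune_rows([[0.1, 0.1], [1.0, -1.0], [0.5, 0.0]], 2))
# ([[1.0, -1.0], [0.5, 0.0]], [1, 2])
```

Unstructured pruning keeps the tensor shape and only helps if the runtime exploits sparsity, while structured pruning shrinks the layer itself and speeds up ordinary dense hardware.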

Knowledge Distillation

In this method, a larger model (the Teacher) transfers its knowledge to a smaller model (the Student). The Student can achieve performance close to the Teacher's while being smaller and less complex.
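A common way to express this transfer is a training loss that blends two terms: how far the Student's temperature-softened output distribution is from the Teacher's (KL divergence), and the usual cross-entropy against the true label. The sketch below computes that loss for a single example; the temperature `4.0` and weighting `alpha = 0.5` are illustrative defaults, not values from this article.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label,
                      temperature=4.0, alpha=0.5):
    """alpha * soft-target loss + (1 - alpha) * hard-label loss."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL(teacher || student) on softened distributions; the T^2 factor keeps
    # its gradient scale comparable to the hard-label term.
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft = kl * temperature ** 2
    # Standard cross-entropy on the true class at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard


teacher = [2.0, 1.0, 0.1]
print(distillation_loss([1.9, 1.1, 0.2], teacher, label=0))  # student close to teacher
print(distillation_loss([0.1, 1.0, 2.0], teacher, label=0))  # student far from teacher
```

The softened Teacher distribution carries information about which wrong classes are "almost right", which is what lets a smaller Student learn more than it would from hard labels alone.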

Neural Architecture Search (NAS)

NAS uses automated search algorithms to find the best neural network architecture for a specific application. When hardware limits such as latency or memory are built into the search, it produces models optimized for Edge hardware constraints.
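Random search is one of the simplest NAS baselines and shows the core loop: sample an architecture, reject it if it violates the hardware budget, and keep the best-scoring survivor. In the sketch below the search space, the latency cost model, and the score function are all hypothetical placeholders; a real system would measure latency on the target device and estimate accuracy by (partially) training each candidate.

```python
import math
import random

# Hypothetical search space: number of layers, channels per layer, kernel size.
SEARCH_SPACE = {
    "depth": [4, 8, 12],
    "width": [16, 32, 64],
    "kernel": [3, 5],
}


def estimate_latency_ms(arch):
    # Hypothetical cost model: latency grows with depth, width and kernel area.
    return 0.002 * arch["depth"] * arch["width"] * arch["kernel"] ** 2


def proxy_score(arch):
    # Hypothetical stand-in for "train briefly and measure validation accuracy":
    # assumes larger models score higher.
    return math.log(arch["depth"] * arch["width"] * arch["kernel"])


def random_search(budget_ms, trials=50, seed=0):
    """Return the best-scoring sampled architecture within the latency budget."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(trials):
        arch = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        if estimate_latency_ms(arch) > budget_ms:
            continue  # reject candidates that violate the hardware constraint
        score = proxy_score(arch)
        if score > best_score:
            best, best_score = arch, score
    return best


best = random_search(budget_ms=5.0)
print(best, estimate_latency_ms(best))
```

More sophisticated NAS methods replace the random sampler with reinforcement learning, evolutionary search, or gradient-based relaxation, but the constraint-then-score structure stays the same.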

Practical Applications of Edge AI

Autonomous and Smart Vehicles

Advanced Driver Assistance Systems (ADAS) use Edge AI for object detection, automatic speed adjustment, and collision warnings. These systems must operate in real-time with minimal delay.

Benefits of Edge AI in vehicles:

- Immediate hazard detection

- Reduced network dependency

- Real-time camera image processing

- Instant decision-making for safety maneuvers
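Why milliseconds matter here can be shown with simple arithmetic: the distance a vehicle travels while waiting for a decision. The sketch assumes a speed of 100 km/h, a 200 ms cloud round trip, and 10 ms on-device inference; these are illustrative figures, not measurements.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled while waiting `delay_ms` for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


cloud = distance_during_delay(100, 200)  # assumed cloud round trip
edge = distance_during_delay(100, 10)    # assumed on-device inference
print(f"cloud: {cloud:.2f} m, edge: {edge:.2f} m")  # cloud: 5.56 m, edge: 0.28 m
```

Under these assumptions the vehicle moves more than five metres before a cloud-based answer arrives, compared with under 30 centimetres for local processing.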

Security and Surveillance Systems

Smart cameras equipped with Edge AI can perform face recognition, suspicious-behavior analysis, and object tracking in real time, without sending video to a centralized server.

Security applications:

- Unauthorized intrusion detection

- People counting and traffic control

- Emotion and behavior analysis

- Automatic incident detection

Smart Internet of Things (IoT)

Edge AI in IoT sensors enables intelligent data processing and pattern recognition directly on the device. These sensors can make decisions without human intervention.

Example applications:

- Real-time health monitoring

- Energy consumption optimization in buildings

- Smart agriculture

- Quality control in production lines
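As a small illustration of on-sensor pattern recognition, the sketch below flags readings that deviate strongly from the running mean (a z-score test), keeping only three numbers of state via Welford's online algorithm. The class name, the threshold of 3 standard deviations, the 10-reading warm-up, and the temperature readings are all illustrative assumptions; production systems often use learned models rather than a fixed z-score rule.

```python
import math


class StreamingAnomalyDetector:
    """Flags sensor readings that deviate strongly from the running mean.

    The running mean and variance are updated with Welford's algorithm,
    so memory use is constant and no history is stored or uploaded.
    """

    def __init__(self, threshold: float = 3.0, warmup: int = 10):
        self.threshold = threshold    # z-score above which a reading is flagged
        self.warmup = max(warmup, 2)  # readings to observe before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                 # sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous, then fold x into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            anomalous = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford's online update of mean and m2
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous


detector = StreamingAnomalyDetector()
readings = [20.1, 20.3, 19.9, 20.0, 20.2, 20.1, 19.8, 20.0, 20.2, 20.1, 20.0, 35.0]
alerts = [r for r in readings if detector.update(r)]
print(alerts)  # only the 35.0 spike is reported
```

Only the alerts would need to leave the device. One design choice to note: this version folds anomalous readings into the statistics too; excluding them would keep a burst of faults from shifting the baseline.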

Medical Devices

Edge AI in the medical field enables faster diagnosis and treatment. Devices such as ECG monitors, MRI scanners, and ultrasound machines can perform initial analyses on the device itself, which is especially valuable when a specialist is not immediately available.

Medical advantages:

- Faster preliminary diagnosis

- Continuous patient monitoring

- Reduced human errors

- Access to medical services in remote areas

Challenges and Limitations of Edge AI

Hardware Limitations

Limited Processing Power: Edge devices typically have less processing power than centralized servers. This limitation makes careful model optimization necessary.

Energy Consumption: Many Edge devices operate on batteries and require careful energy management. AI models must be designed to consume minimal energy.

Limited Memory: Limited RAM and storage on Edge devices restrict the size of models that can be deployed.

Software Challenges

Development Complexity: Developing Edge AI applications requires specialized knowledge in model optimization and embedded systems programming.

Model Updates: Updating AI models in distributed devices is a major challenge, especially when devices have limited internet access.

Standardization: The lack of unified standards for Edge AI makes it difficult to develop integrated solutions.

Security Issues

Physical Attacks: Edge devices are often deployed in physically accessible locations, which exposes them to tampering and calls for stronger security mechanisms.

Model Protection: AI models stored on Edge devices are vulnerable to theft and reverse engineering, so techniques such as model encryption are needed.

Solutions and Strategies for Overcoming Challenges

Model Optimization

AutoML for Edge: Using AutoML tools that automatically design models for Edge constraints.

Federated Learning: This approach enables model training without data transfer. Models are trained on local devices and only updated parameters are sent to the central server.
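The server-side step of Federated Averaging (FedAvg), one widely used federated learning algorithm, can be sketched as a weighted average of the parameters each device sends back, weighted by how many local samples it trained on. Local training is omitted here: each client's parameter vector is assumed to be already computed, and the function name and example values are hypothetical. Note that no raw data appears anywhere in the aggregation.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation of client parameters.

    client_updates: list of (num_samples, parameters) tuples, where
    parameters is a list of floats of the same length for every client.
    Returns the new global parameter vector.
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][1])
    global_params = [0.0] * dim
    for n, params in client_updates:
        if len(params) != dim:
            raise ValueError("parameter vectors must have equal length")
        for i, p in enumerate(params):
            global_params[i] += p * n / total  # larger datasets count more
    return global_params


# Two hypothetical devices: one trained on 100 samples, one on 300.
print(federated_average([(100, [1.0, 2.0]), (300, [3.0, 4.0])]))  # [2.5, 3.5]
```

In a full round, the server would send the averaged parameters back to the devices, which continue training locally before the next aggregation.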

Edge-Cloud Hybrid: Combining Edge AI with Cloud Computing to leverage the benefits of both approaches.
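One common hybrid pattern is confidence-based offloading: answer on the device when the local model is confident, escalate to a larger cloud model only when it is not, and fall back to the local answer when the network is down. The sketch below is an illustration of that pattern, not a specific product's API; the function name, the 0.8 threshold, and the toy models are hypothetical.

```python
from typing import Callable, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def hybrid_predict(
    x,
    edge_model: Callable[[object], Prediction],
    cloud_model: Optional[Callable[[object], Prediction]] = None,
    confidence_threshold: float = 0.8,
) -> Tuple[str, str]:
    """Return (label, where_it_ran). Run on-device first and escalate to the
    cloud only when the local result is uncertain and the cloud is reachable."""
    label, confidence = edge_model(x)
    if confidence >= confidence_threshold or cloud_model is None:
        return label, "edge"
    try:
        cloud_label, _ = cloud_model(x)
        return cloud_label, "cloud"
    except ConnectionError:
        # No connectivity: keep working with the local answer instead of failing.
        return label, "edge"


# Hypothetical stand-in models for illustration only.
def toy_edge_model(x):
    return ("cat", 0.95) if x > 0 else ("dog", 0.55)


def toy_cloud_model(x):
    return ("wolf", 0.99)


def offline_cloud_model(x):
    raise ConnectionError("network unreachable")


print(hybrid_predict(1, toy_edge_model, toy_cloud_model))       # ('cat', 'edge')
print(hybrid_predict(-1, toy_edge_model, toy_cloud_model))      # ('wolf', 'cloud')
print(hybrid_predict(-1, toy_edge_model, offline_cloud_model))  # ('dog', 'edge')
```

The threshold controls the trade-off: raising it sends more inputs to the cloud (better accuracy, more latency and bandwidth), while lowering it keeps more decisions on the device.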

Development Platforms

MLOps for Edge: Implementing development pipelines that include training, optimization, testing, and deploying models on Edge.

Container Technology: Using containers for easy deployment and management of Edge AI applications.

The Future of Edge AI: Trends and Predictions

Hardware Development

More Efficient Processors: New generation NPUs and AI processors with higher efficiency and lower energy consumption are under development.

Memory-Compute Integration: New architectures that integrate memory and processing to reduce data movement and speed up data access.

Novel Algorithms

Neuromorphic Computing: Computing systems inspired by the human brain, designed to be more energy-efficient and well suited to Edge AI.

Continual Learning: The ability for continuous learning in Edge devices without losing previous knowledge.

Standardization and Ecosystem

Open Standards: Development of open standards to facilitate cooperation between different devices and systems.

Edge AI as a Service: Providing Edge AI as a service that enables easier access for companies and developers.

Conclusion

Edge AI is driving a major shift in the technology world by moving artificial intelligence processing from centralized data centers to local devices. This transition increases speed and efficiency and improves data security and privacy.

Despite challenges such as hardware limitations and development complexity, solutions and optimization techniques continue to evolve. The outlook for Edge AI is promising, with extensive applications in fields such as health, transportation, security, and the Internet of Things.

For success in this field, organizations must invest in Edge AI technologies, train specialized human resources, and develop appropriate infrastructure. Edge AI is not just a technology trend, but a necessity for an intelligent and connected future.
