Recurrent Neural Networks (RNN): Architecture, Applications, and Challenges

Introduction

Recurrent Neural Networks (RNNs) are a family of deep learning architectures designed to process sequential data such as text, audio, and time series. Unlike feedforward networks, which treat each input independently, RNNs carry a hidden state from one step to the next and use it to learn dependencies between positions in a sequence. This capability makes them well suited for applications such as natural language processing (NLP), speech recognition, and time series analysis.

In this article, we introduce the RNN architecture, its applications, advantages, disadvantages, and challenges.

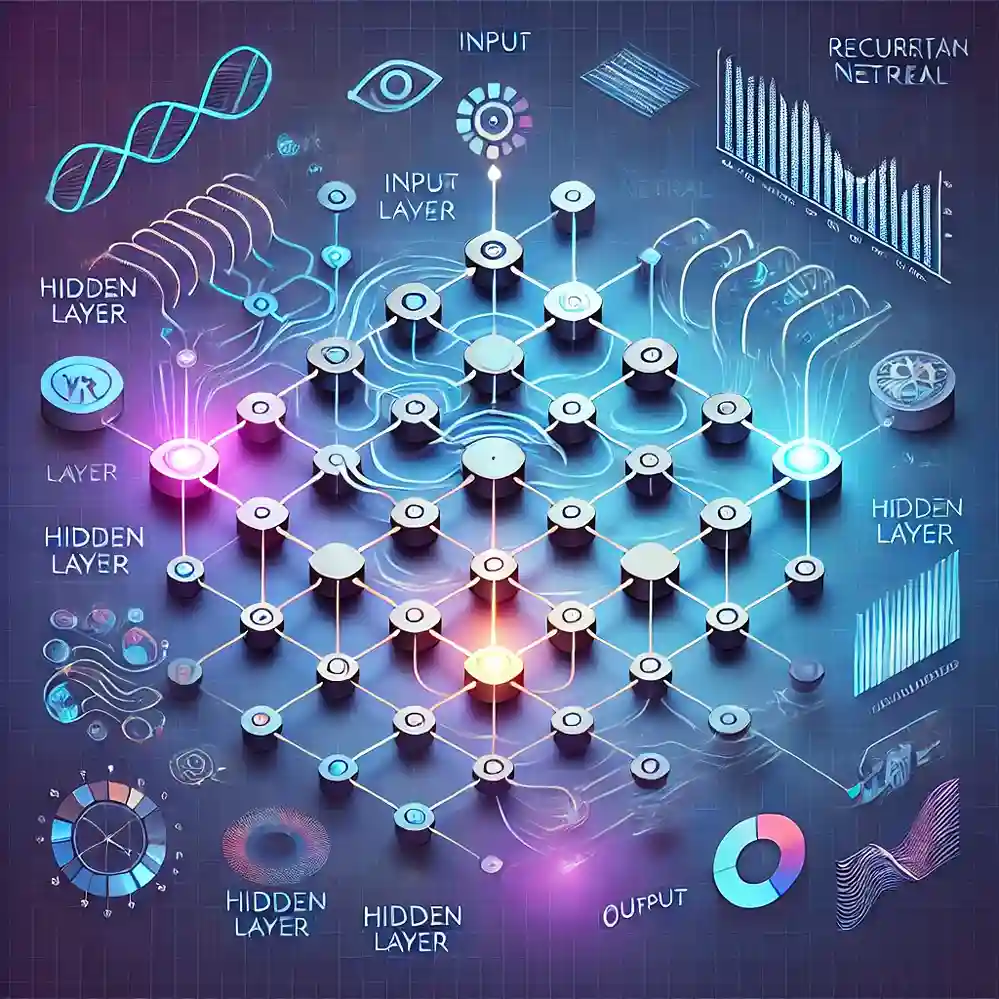

RNN Architecture

1. Basic RNN Structure

RNNs are designed to retain information from previous inputs when processing new ones via a recurrent loop. At each time step, an RNN computes its output based on the current input and its previous hidden state.

The core RNN update is:

\(h_t = f\bigl(W_h \cdot h_{t-1} + W_x \cdot x_t + b\bigr)\)

where:

- \(h_t\): hidden state at time \(t\)

- \(h_{t-1}\): hidden state at time \(t-1\)

- \(x_t\): input at time \(t\)

- \(W_h, W_x\): recurrent (hidden-to-hidden) and input-to-hidden weight matrices

- \(b\): bias vector

- \(f\): activation function (commonly \(\tanh\))
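As a minimal sketch of the update rule, the step below uses plain Python lists and assumes \(f = \tanh\). The helper names (`matvec`, `rnn_step`) and the toy weight values are illustrative assumptions, not part of any library; a real implementation would use an array library.

```python
import math

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in W]

def rnn_step(h_prev, x_t, W_h, W_x, b):
    """One update: h_t = tanh(W_h h_{t-1} + W_x x_t + b)."""
    rec = matvec(W_h, h_prev)   # contribution of the previous hidden state
    inp = matvec(W_x, x_t)      # contribution of the current input
    return [math.tanh(r + i + b_k) for r, i, b_k in zip(rec, inp, b)]

# Toy sizes: hidden size 2, input size 3 (values are arbitrary).
W_h = [[0.5, -0.1], [0.2, 0.3]]
W_x = [[0.1, 0.0, -0.2], [0.4, 0.1, 0.0]]
b = [0.0, 0.1]

h = [0.0, 0.0]  # initial hidden state h_0
for x_t in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h = rnn_step(h, x_t, W_h, W_x, b)  # the same weights are reused at every step
print(h)
```

The loop is the "recurrent" part: each call receives the hidden state produced by the previous call.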

2. RNN Variants

Based on input/output patterns, RNNs fall into:

- One-to-One: for non-sequential tasks (e.g., image classification).

- One-to-Many: for sequence generation (e.g., text or music).

- Many-to-One: for sequence classification (e.g., sentiment analysis).

- Many-to-Many: for sequence-to-sequence tasks (e.g., machine translation).
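The patterns above differ mainly in which inputs are fed in and which hidden states are read out. The sketch below illustrates this with a scalar RNN; the function names and weight values are assumptions chosen for the example, and the many-to-many case shown is the simple aligned one (one output per input), whereas translation systems typically use an encoder-decoder arrangement.

```python
import math

def run_rnn(xs, w_h=0.5, w_x=1.0, b=0.0):
    """Scalar RNN: return the hidden state after every time step."""
    h, states = 0.0, []
    for x_t in xs:
        h = math.tanh(w_h * h + w_x * x_t + b)
        states.append(h)
    return states

def generate(seed, steps, w_h=0.5, w_x=1.0):
    """One-to-many: feed each output back in as the next input."""
    h, x_t, outputs = 0.0, seed, []
    for _ in range(steps):
        h = math.tanh(w_h * h + w_x * x_t)
        outputs.append(h)
        x_t = h
    return outputs

xs = [0.2, -0.1, 0.4, 0.3]
states = run_rnn(xs)

many_to_one = states[-1]        # one summary value for the whole sequence
many_to_many = states           # one output per input step
one_to_many = generate(0.2, 4)  # a single seed input, several outputs
```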

3. Vanishing Gradient Problem

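A key issue in training vanilla RNNs is the vanishing gradient: as the error signal is backpropagated through many time steps, it is multiplied repeatedly by the recurrent weights and the activation derivative, and it can shrink toward zero. Early time steps then receive almost no learning signal, which makes long-term dependencies hard to learn. Architectures like LSTM and GRU address this challenge.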
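To make the effect concrete, here is a small sketch for a scalar RNN \(h_t = \tanh(w \cdot h_{t-1} + x_t)\). By the chain rule, the gradient of the last hidden state with respect to the initial one is a product of per-step factors \(w \cdot (1 - h_t^2)\). The weight `0.5` and the constant inputs are arbitrary assumptions for illustration.

```python
import math

def gradient_through_time(w, xs):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = 0.0, 1.0
    for x_t in xs:
        h = math.tanh(w * h + x_t)
        grad *= w * (1.0 - h * h)  # chain rule: local derivative at this step
    return grad

xs = [0.1] * 50
grad_5 = abs(gradient_through_time(0.5, xs[:5]))   # short sequence
grad_50 = abs(gradient_through_time(0.5, xs))      # long sequence
print(grad_5, grad_50)
```

Because each factor has magnitude at most `0.5` here, the gradient over 50 steps is many orders of magnitude smaller than over 5 steps.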

Advantages of RNNs

1. Sequential Data Processing

RNNs excel at learning temporal and sequential relationships, making them highly effective for time series analysis and NLP.

2. Weight Sharing

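Because the same weights are reused at every time step, the number of parameters in an RNN does not grow with sequence length, unlike a fully connected network applied to the whole sequence at once. This keeps the model compact, although computation time still grows with the number of time steps.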
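A quick parameter count illustrates the point. The sketch compares a single RNN cell with one dense layer applied to the flattened sequence; the sizes (`D = 32`, `H = 64`) and the function names are assumptions made for this example.

```python
def rnn_param_count(input_size, hidden_size):
    """Parameters in W_h (H x H), W_x (H x D), and b (H)."""
    return hidden_size * hidden_size + hidden_size * input_size + hidden_size

def flattened_dense_param_count(seq_len, input_size, hidden_size):
    """One dense layer over the sequence flattened to seq_len * input_size values."""
    return hidden_size * seq_len * input_size + hidden_size

D, H = 32, 64
for T in (10, 100, 1000):
    # The RNN count is the same for every T; the dense count grows linearly with T.
    print(T, rnn_param_count(D, H), flattened_dense_param_count(T, D, H))
```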

3. High Flexibility

RNNs can be applied to a wide range of tasks such as prediction, sequence generation, and translation.

RNN Applications

1. Natural Language Processing (NLP)

RNNs are fundamental in NLP for tasks like text analysis, machine translation, text generation, and sentiment detection.

2. Speech Recognition

RNNs power speech-to-text, analyzing complex audio patterns for accurate transcription.

3. Time Series Analysis

RNNs are used to forecast stock prices, weather data, and sales trends, using patterns in past observations to predict future values.
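One common way to prepare a series for this kind of forecasting is to slice it into fixed-length windows of past values, each paired with the value that follows. The sketch below assumes a window length of 3 and made-up sales figures; `make_windows` is a hypothetical helper name.

```python
def make_windows(series, window):
    """Split a series into (past values, next value) training pairs."""
    pairs = []
    for i in range(len(series) - window):
        pairs.append((series[i:i + window], series[i + window]))
    return pairs

sales = [10, 12, 13, 15, 18, 21]  # illustrative data only
pairs = make_windows(sales, window=3)
for past, target in pairs:
    print(past, "->", target)
```

Each window would be fed to the RNN one value per time step, with the final hidden state used to predict the target (the many-to-one pattern).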

4. Recommender Systems

RNNs drive personalized recommendations in streaming services, e-commerce, and content platforms by modeling user behavior over time.

5. Robotics and Control

RNNs control dynamic systems and robots, learning temporal dependencies to improve real-time decision-making.

Challenges and Limitations of RNNs

1. Vanishing and Exploding Gradients

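RNNs can suffer from vanishing or exploding gradients. Vanishing gradients stall learning for early time steps, while exploding gradients produce very large weight updates that destabilize training.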

2. Data Requirements

RNNs need large amounts of high-quality sequential data; insufficient data degrades performance.

3. Computational Complexity

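Training RNNs is time-consuming and resource-intensive. Each time step depends on the previous hidden state, so the steps of a sequence must be processed in order, which limits parallelization on long sequences.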

4. Long-Term Dependency Learning

Simple RNNs struggle with extremely long-term dependencies; LSTM/GRU mitigate but do not fully eliminate this limitation.

Improving RNNs

Techniques to enhance RNN performance include:

- Using LSTM and GRU: Specifically designed to combat vanishing gradients.

- Gradient Clipping: Prevents exploding gradients during training.

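- Regularization and Normalization Techniques: Methods like Dropout improve generalization, and normalization stabilizes training. In recurrent networks, Layer Normalization is usually easier to apply than Batch Normalization.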
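Gradient clipping is simple enough to sketch directly. The version below clips by global L2 norm: if the joint norm of all gradients exceeds a threshold, every gradient is scaled down by the same factor so the direction is preserved. The function name and the threshold `max_norm=1.0` are assumptions for illustration; deep learning frameworks ship their own clipping utilities.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient vectors so their joint L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total <= max_norm:
        return grads, total  # already within the limit: leave unchanged
    scale = max_norm / total
    return [[g * scale for g in vec] for vec in grads], total

grads = [[3.0, 4.0], [0.0, 12.0]]  # joint norm = sqrt(9 + 16 + 144) = 13
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(norm_before, clipped)
```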

Conclusion

Recurrent Neural Networks (RNNs) remain a useful deep learning architecture for modeling temporal dependencies in sequential data. Challenges such as vanishing gradients and high computational cost persist, but variants such as LSTM and GRU, together with techniques like gradient clipping and regularization, keep RNNs practical for many sequence modeling tasks.
